
Offline (Batch) Reinforcement Learning: A Review of Literature and Applications

Reinforcement learning is a promising technique for learning how to perform tasks through trial and error, with an appropriate balance of exploration and exploitation. Offline Reinforcement Learning, also known as Batch Reinforcement Learning, is a variant of reinforcement learning that requires the agent to learn from a fixed batch of data without exploration. In other words, how does one maximally exploit a static dataset? The research community has grown interested in this in part because larger datasets are available that might be used to train policies for physical robots. Exploration with a physical robot may risk damage to robot hardware or surrounding objects. In addition, since offline reinforcement learning disentangles exploration from exploitation, it can help provide standardized comparisons of the exploitation capability of reinforcement learning algorithms.

Offline reinforcement learning, henceforth Offline RL, is closely related to imitation learning (IL) in that the latter also learns from a fixed dataset without exploration. However, there are several key differences.

-

Offline RL algorithms (so far) have been built on top of standard off-policy Deep Reinforcement Learning (Deep RL) algorithms, which tend to optimize some form of a Bellman equation or temporal difference (TD) error.

-

Most IL problems assume an optimal, or at least a high-performing, demonstrator which provides data, whereas Offline RL may have to handle highly suboptimal data.

-

Most IL problems do not have a reward function. Offline RL considers rewards, which furthermore can be processed after-the-fact and modified.

-

Some IL problems require the data to be labeled as expert versus non-expert. Offline RL does not make this assumption.

I preface the IL descriptions with “some” and “most” because there are exceptions to every case and because the line between methods is not firm, as I emphasized in a blog post about combining IL and RL.

Offline RL is therefore about deriving the best policy possible given the data. This gives us the hope of out-performing the demonstration data, which is still often a difficult problem for imitation learning. To be clear, in tabular settings with infinite state visitation, it can be shown that algorithms such as Q-learning converge to an optimal policy despite potentially sub-optimal off-policy data. However, as some of the following papers show, even “off-policy” Deep RL algorithms such as the Deep Q-Network (DQN) algorithm require substantial amounts of “on-policy” data from the current behavioral policy in order to learn effectively, or else they risk performance collapse.

For a further introduction to Offline RL, I refer you to (Lange et al., 2012). It provides an overview of the problem, and presents Fitted Q Iteration (Ernst et al., 2005) as the “Q-Learning of Offline RL” along with a taxonomy of several other algorithms. While useful, (Lange et al., 2012) is mostly a pre-deep reinforcement learning reference which only discusses up to Neural Fitted Q-Iteration and their proposed variant, Deep Fitted Q-Iteration. The current popularity of deep learning means, to the surprise of no one, that recent Offline RL papers learn policies parameterized by deeper neural networks and are applied to harder environments. Also, perhaps unsurprisingly, at least one of the authors of (Lange et al., 2012), Martin Riedmiller, is now at DeepMind and appears to be working on … Offline RL.

In the rest of this post, I will summarize my view of the Offline RL literature. From my perspective, it can be roughly split into two categories:

-

those which try to constrain the reinforcement learning algorithm to consider only actions or state-action pairs that are likely to appear in the data.

-

those which focus on the dataset, either by maximizing the data diversity or size while using strong off-policy (but not specialized to the offline setting) algorithms, or which propose new benchmark environments.

I will review the first category, followed by the second category, then end with a summary of my thoughts along with links to relevant papers.

As of May 2020, there is a recent survey from Professor Sergey Levine of UC Berkeley, whose group has done significant work in Offline RL. I began drafting this post well before the survey was released but engaged in my bad “leave the draft alone for weeks” habit. Professor Levine chooses a different set of categories, as his papers cover a wider range of topics, so hopefully this post provides an alternative yet useful perspective.

Off-Policy Deep Reinforcement Learning Without Exploration

(Fujimoto et al., 2019) was my introduction to Offline RL. I have a more extensive blog post which dissects the paper, so I’ll do my best to be concise in this post. The main takeaway is showing that most “off-policy algorithms” in deep RL will fail when solely shown off-policy data due to extrapolation error, where state-action pairs $(s,a)$ outside the data batch can have arbitrarily inaccurate values, which adversely affects algorithms that rely on propagating those values. In the online setting, exploration would be able to correct for such values because one can get ground-truth rewards, but the offline case lacks that luxury.

The proposed algorithm is Batch Constrained deep Q-learning (BCQ). The idea is to run normal Q-learning, but in the maximization step (which is normally $\max_{a'} Q(s',a')$), instead of considering the max over all possible actions, we want to only consider actions $a'$ such that $(s',a')$ actually appeared in the batch of data. Or, in more realistic cases, eliminate actions which are unlikely to be selected by the behavior policy $\pi_b$ (the policy that generated the static data).

BCQ trains a generative model — a Variational AutoEncoder — to generate actions that are likely to be from the batch, and a perturbation model which further perturbs the action. At test-time rollouts, they sample $N$ actions via the generator, perturb each, and pick the action with highest estimated Q-value.
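The test-time selection step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `generator`, `perturb`, and `q_value` are hypothetical stand-ins for the trained VAE decoder, the perturbation model, and the Q-network, and the toy versions below are simple functions rather than the networks from the paper.

```python
import numpy as np

def bcq_select_action(state, generator, perturb, q_value, n_samples=10, rng=None):
    """Sample candidate actions, perturb each, and return the one with the highest Q-value."""
    rng = np.random.default_rng() if rng is None else rng
    # Repeat the state so that every candidate action is paired with it.
    states = np.repeat(state[None, :], n_samples, axis=0)
    candidates = generator(states, rng)                     # actions likely under the batch
    candidates = candidates + perturb(states, candidates)   # small correction per action
    q_values = q_value(states, candidates)                  # shape: (n_samples,)
    return candidates[np.argmax(q_values)]

# Toy stand-ins (assumptions for illustration only, not the paper's models).
def toy_generator(states, rng):
    return np.clip(rng.normal(0.0, 0.5, size=(states.shape[0], 2)), -1.0, 1.0)

def toy_perturb(states, actions):
    return np.zeros_like(actions)  # a real perturbation model would be learned

def toy_q_value(states, actions):
    return -np.sum((actions - 0.3) ** 2, axis=1)  # prefers actions near (0.3, 0.3)

action = bcq_select_action(np.zeros(4), toy_generator, toy_perturb, toy_q_value,
                           n_samples=10, rng=np.random.default_rng(0))
```

The key point is that the argmax only ranges over candidates proposed by the generative model, so actions far from the batch are never evaluated.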

They design experiments as follows, where in all cases there is a behavioral DDPG agent which generates the batch of data for Offline RL:

-

Final Buffer: train the behavioral agent for 1 million steps with high exploration, and pool all the logged data into a replay buffer. Train a new DDPG agent from scratch, only on that replay buffer with no exploration. Since the behavioral agent will have been learning along those 1 million steps, there should be high “state coverage.”

-

Concurrent: as the behavioral agent learns, train a new DDPG agent concurrently (hence the name) on the behavioral DDPG replay buffer data. Again, there is no exploration for the new DDPG agent. The two agents should have identical replay buffers throughout learning.

-

Imitation Learning: train the behavioral agent until it is sufficiently good, then run it for 1 million steps (potentially with more noise to increase state coverage) to get the replay buffer. The difference with “final buffer” is that the 1 million steps are all from the same policy, whereas the final buffer was collected throughout 1 million steps of training, during which the policy may have changed through many, many gradient updates depending on the gradient-to-env-steps hyper-parameter.

The biggest surprise is that even in the concurrent setting, the new DDPG agent fails to learn well! To be clear: the agents start at the beginning with identical replay buffers, and the offline agent draws minibatches directly from the online agent’s buffer. I can only think of a handful of differences in the training process: (1) the randomness in the initial policy and (2) noise in minibatch sampling. Am I missing anything? Those factors should not be significant enough to lead to divergent performance. In contrast, BCQ is far more effective at learning offline from the given batch of DDPG data.

When reading papers, I often find myself wondering about the relationship between algorithms in batches (pun intended) of related papers. Conveniently, there is a NeurIPS 2019 workshop paper where Fujimoto benchmarks algorithms. Let’s turn to that.

Benchmarking Batch Deep Reinforcement Learning Algorithms

This solid NeurIPS 2019 workshop paper, by the same author as the BCQ paper, makes a compelling case for the need to evaluate Batch RL algorithms under unified settings. Some research, such as his own, shows that commonly-used off-policy Deep RL algorithms fail to learn in an offline fashion, whereas (Agarwal et al., 2020) counter this, but with the caveat of using a much larger dataset.

One of the nice things about the paper is that it surveys some of the algorithms researchers have used for Batch RL, including Quantile Regression DQN (QR-DQN), Random Ensemble Mixture (REM), Batch Constrained Deep Q-Learning (BCQ), Bootstrapping Error Accumulation Reduction Q-Learning (BEAR-QL), KL-Control, and Safe Policy Improvement with Baseline Bootstrapping DQN (SPIBB-DQN). All these algorithms are specialized for the Batch RL setting with the exception of QR-DQN, which is a strong off-policy algorithm shown to work well in an offline setting.

Now, what’s the new algorithm that Fujimoto proposes? It’s a discrete version of BCQ. The algorithm is delightfully straightforward:

My “TL;DR”: train a behavior cloning network to predict actions of the behavior policy based on its states. For the Q-function update on iteration $k$, change the maximization over the successor state actions to only consider actions satisfying a threshold:

\[\mathcal{L}(\theta) = \ell_k \left(r + \gamma \cdot \Bigg( \max_{a' \; \mbox{s.t.} \; \frac{G_\omega(a'|s')}{\max_{\hat{a}} \; G_\omega(\hat{a}|s')} > \tau} Q_{\theta'}(s',a') \Bigg) - Q_\theta(s,a) \right)\]

When executing the policy during test-time rollouts, we can use a similar threshold:

\[\pi(s) = \operatorname*{argmax}_{a \; \mbox{s.t.} \; \frac{G_\omega(a|s)}{\max_{\hat{a}} \; G_\omega(\hat{a}|s)} > \tau} Q_\theta(s,a)\]

Note the contrast: normally in Q-learning, we’d just do the max or argmax over the entire set of valid actions. Therefore, we will end up ignoring some actions that potentially have high Q-values, but that’s fine (and desirable!) if those actions have vastly over-estimated Q-values.

Some additional thoughts:

-

The parallels are obvious between $G_\omega$ in continuous versus discrete BCQ. In the continuous case, it is necessary to develop a generative model which may be complex to train. In the discrete case, it’s much simpler: run behavior cloning!

-

I was confused about why BCQ does the behavior cloning update of $\omega$ inside the for loop, rather than beforehand. Since the data is fixed, this seems suboptimal since the optimization for $\theta$ will rely on an inaccurate model $G_\omega$ during the first few iterations. After contacting Fujimoto, he agreed that it is probably better to move the optimization before the loop, but his results were not significantly better.

-

There is a $\tau$ parameter we can vary. What happens when $\tau = 0$? Then it’s simple: standard Q-learning, because any action should have non-zero probability from the generative model. Now, what about $\tau=1$? In practice, this is exactly behavior cloning, because when the policy selects actions it will only consider the action with highest $G_\omega$ value, regardless of its Q-value. The actual Q-learning portion of BCQ is therefore completely unnecessary since we ignore the Q-network!

-

According to the appendix, they use $\tau = 0.3$.
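The thresholded action selection, and the effect of $\tau$ at its extremes, can be checked with a small sketch. The function name and the toy numbers are assumptions for illustration; `bc_probs` plays the role of $G_\omega(\cdot|s)$ and `q_values` the role of $Q_\theta(s,\cdot)$. Because the rule uses a strict inequality, the sketch uses a value just under 1 rather than exactly $\tau = 1$ (which would exclude every action).

```python
import numpy as np

def discrete_bcq_action(q_values, bc_probs, tau=0.3):
    """Greedy action among those whose relative behavior-cloning probability exceeds tau."""
    q_values = np.asarray(q_values, dtype=float)
    bc_probs = np.asarray(bc_probs, dtype=float)
    ratio = bc_probs / bc_probs.max()       # G(a|s) / max_a' G(a'|s)
    allowed = ratio > tau                   # strict threshold, as in the equations above
    masked_q = np.where(allowed, q_values, -np.inf)
    return int(np.argmax(masked_q))

q = [1.0, 5.0, 2.0]      # action 1 has the highest (possibly over-estimated) Q-value
g = [0.60, 0.05, 0.35]   # but the behavior policy almost never takes it

a_default = discrete_bcq_action(q, g, tau=0.3)    # action 1 is filtered out -> picks 2
a_q_learning = discrete_bcq_action(q, g, tau=0.0) # nothing filtered -> picks 1
a_cloning = discrete_bcq_action(q, g, tau=0.99)   # only the most likely action -> picks 0
```

With these numbers, the three settings pick three different actions, which makes the trade-off between trusting the Q-network and trusting the cloned policy easy to see.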

There are no theoretical results here; the paper is strictly experimental. The experiments are on nine Atari games. The batch of data is generated from a partially trained DQN agent over 10M steps (50M steps is standard). Note the critical design choice of whether:

- we take a single fixed snapshot (i.e., a stationary policy) and roll it out to get steps, or

- we take logged data from an agent during its training run (i.e., a non-stationary policy).

Fujimoto implements the first case, arguing that it is more realistic, but I think that claim is highly debatable. Since the policy is fixed, Fujimoto injects noise by setting $\epsilon=0.2$ 80% of the time, and setting $\epsilon=0.001$ otherwise. This must be done on a per-episode basis — it doesn’t make sense to change epsilons within an episode!
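As a rough illustration of the per-episode noise scheme, the sketch below draws one epsilon at the start of each episode and would then reuse it for every step of that episode. The function names are hypothetical, and only the probabilities and epsilon values come from the description above.

```python
import random

def sample_episode_epsilon(rng, p_noisy=0.8, eps_noisy=0.2, eps_quiet=0.001):
    """Pick a single epsilon that is held fixed for a whole episode."""
    return eps_noisy if rng.random() < p_noisy else eps_quiet

def epsilon_greedy(q_values, epsilon, rng):
    """Standard epsilon-greedy choice over a list of Q-values."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

rng = random.Random(0)
episode_epsilons = [sample_episode_epsilon(rng) for _ in range(10000)]
# Fraction of episodes that used the noisier setting; should be close to 0.8.
frac_noisy = sum(e == 0.2 for e in episode_epsilons) / len(episode_epsilons)
```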

What are some conclusions from the paper?

-

Discrete BCQ seems to be the best of the “batch RL” algorithms tested. But the curves look really weird: BCQ performance shoots up to be at or slightly above the noise-free policy, but then stagnates! I should also add: exceeding the underlying noise-free policy is nice, but the caveat is that it’s from a partially trained DQN, which is a low bar.

-

For the “standard” off-policy algorithms of DQN, QR-DQN, and REM, QR-DQN is the winner, but still under-performs a noisy behavior policy, which is unsatisfactory. Regardless, trying QR-DQN in an offline setting, even though it’s not specialized for that case, might be a good idea if the dataset is large enough.

-

Results confirm some findings from (Agarwal et al., 2020), in that distributional RL aids in exploitation, but suggest that the success they observed is highly specific to the settings Agarwal used: a full 50M history of a teacher’s replay buffer, with a changing snapshot, plus noise from sticky actions.

Here’s a summary of results in their own words:

Although BCQ has the strongest performance, on most games it only matches the performance of the online DQN, which is the underlying noise-free behavioral policy. These results suggest BCQ achieves something closer to robust imitation, rather than true batch reinforcement learning when there is limited exploratory data.

This brings me to one of my questions (or aspirations, if you put it that way). Is it possible to run offline RL, and reliably exceed the noise-free behavior policy? That would be a dream scenario indeed.

Stabilizing Off-Policy Q-Learning via Bootstrapping Error Reduction

This NeurIPS 2019 paper is highly related to Fujimoto’s BCQ paper covered earlier, in that it also focuses on an algorithm to constrain the distribution of actions considered when running Q-learning in a pure off-policy fashion. It identifies a concept known as bootstrapping error which is clearly described in the abstract alone:

We identify bootstrapping error as a key source of instability in current methods. Bootstrapping error is due to bootstrapping from actions that lie outside of the training data distribution, and it accumulates via the Bellman backup operator. We theoretically analyze bootstrapping error, and demonstrate how carefully constraining action selection in the backup can mitigate it.

I immediately thought: what’s the difference between bootstrapping error here versus extrapolation error from (Fujimoto et al., 2019)? Both terms can be used to refer to the same problem of propagating inaccurate Q-values during Q-learning. However, extrapolation error is a broader problem that appears in supervised learning contexts, whereas bootstrapping is specific to reinforcement learning algorithms that rely on bootstrapped estimates.

The authors have an excellent BAIR Blog post which I highly recommend because it provides great intuition on how bootstrapping error affects offline Q-learning on static datasets. For example, this figure below shows that in the second plot, we may have actions $a$ that are outside the distribution of actions (OOD is short for out-of-distribution) induced by the behavior policy $\beta(a|s)$, indicated with the dashed line. Unfortunately, if those actions have $Q(s,a)$ values that are much higher, then they are used in the bootstrapping process for Q-learning to form the targets for Q-learning updates.

Incorrectly high Q-values for OOD actions may be used for backups, leading to accumulation of error. Figure and caption credit: Aviral Kumar.

They also have results showing that if one runs a standard off-the-shelf off-policy (not offline) RL algorithm, simply increasing the size of the static dataset does not appear to mitigate performance issues, which suggests the need for further study.

The main contributions of their paper are: (a) theoretical analysis that carefully constraining the actions considered during Q-learning can mitigate error propagation, and (b) a resulting practical algorithm known as “Bootstrapping Error Accumulation Reduction” (BEAR). (I am pretty sure that “BEAR” is meant to be a spin on “BAIR,” which is short for Berkeley Artificial Intelligence Research.)

The BEAR algorithm is visualized below. The intuition is to ensure that the learned policy matches the support of the action distribution from the static data. In contrast, an algorithm such as BCQ focuses on distribution matching (center). This distinction is actually pretty powerful; only requiring a support match is a much weaker assumption, which enables Offline RL to more flexibly consider a wider range of actions so long as the batch of data has used those actions at some point with non-negligible probability.

Illustration of support constraint (BEAR) (right) and distribution-matching constraint (middle). Figure and caption credit: Aviral Kumar.

To enforce this in practice, BEAR uses what’s known as the Maximum Mean Discrepancy (MMD) distance between actions from the unknown behavior policy $\beta$ and the actor $\pi$. This can be estimated directly from samples. Putting everything together, their policy improvement step for actor-critic algorithms is succinctly represented by Equation 1 from the paper:

\[\pi_\phi := \max_{\pi \in \Delta_{|S|}} \mathbb{E}_{s \sim \mathcal{D}} \mathbb{E}_{a \sim \pi(\cdot|s)} \left[ \min_{j=1,\ldots,K} \hat{Q}_j(s, a)\right] \quad \mbox{s.t.} \quad \mathbb{E}_{s \sim \mathcal{D}} \Big[ \text{MMD}(\mathcal{D}(\cdot|s), \pi(\cdot|s)) \Big] \leq \varepsilon\]

The notation is described in the paper, but just to clarify: $\mathcal{D}$ represents the static data of transitions collected by behavioral policy $\beta$, and the $j$ subscripts are from the ensemble of $K$ Q-functions used to compute a conservative estimate of Q-values. The ensemble is the less interesting aspect of the policy update as compared to the MMD constraint; in fact the BAIR Blog post doesn’t include the ensemble in the policy update. As far as I can tell, there is no ablation study that tests just using one or two Q-networks, so I wonder which of the two is more important: the ensemble of networks, or the MMD constraint?
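To give a feel for how MMD can be estimated purely from samples, here is a minimal NumPy sketch of the (biased) squared-MMD estimate between two sets of actions. The Gaussian kernel and its bandwidth `sigma` are assumptions chosen for illustration, and the toy action sets are synthetic; this is not the paper's implementation.

```python
import numpy as np

def gaussian_kernel(x, y, sigma=1.0):
    """Pairwise Gaussian kernel between rows of x (n, d) and rows of y (m, d)."""
    sq_dists = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma ** 2))

def mmd_squared(x, y, sigma=1.0):
    """Biased sample estimate of squared MMD: E[k(x,x')] - 2 E[k(x,y)] + E[k(y,y')]."""
    k_xx = gaussian_kernel(x, x, sigma).mean()
    k_yy = gaussian_kernel(y, y, sigma).mean()
    k_xy = gaussian_kernel(x, y, sigma).mean()
    return k_xx - 2.0 * k_xy + k_yy

rng = np.random.default_rng(0)
data_actions = rng.normal(0.0, 0.3, size=(200, 2))  # stand-in for actions in the batch
close_policy = rng.normal(0.0, 0.3, size=(200, 2))  # policy that stays near the data
far_policy = rng.normal(2.0, 0.3, size=(200, 2))    # policy that drifts away from the data

mmd_close = mmd_squared(data_actions, close_policy)
mmd_far = mmd_squared(data_actions, far_policy)
```

A policy whose sampled actions drift away from the data yields a much larger estimate, which is the quantity the constraint in the equation above keeps below $\varepsilon$.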

The most closely related algorithm to BEAR is the previously-discussed BCQ (Fujimoto et al., 2019). How do they compare? The BEAR authors (Kumar et al., 2019) claim:

-

Their theory shows convergence properties under weaker assumptions, and they are able to bound the suboptimality of their approach.

-

BCQ is generally better when off-policy data is collected by an expert, but BEAR is better when data is collected by a weaker (or even random) policy. They claim this is because BCQ too aggressively constrains the distribution of actions, and this matches the interpretation of BCQ as matching the distribution of the policy of the data batch, whereas BEAR focuses on only matching the action support.

Upon reading this, I became curious to see if there’s a way to combine the strengths of both of the algorithms. I am also not entirely convinced that MuJoCo is the best way to evaluate these algorithms, so we should hopefully look at what other datasets might appear in the future so that we can perform more extensive comparisons of BEAR and BCQ.

At this point, we now consider papers that are in the second category – those which, rather than constrain actions in some way, focus on investigating what happens with a large and diverse dataset while maximizing the exploitation capacity of standard off-policy Deep RL algorithms.

An Optimistic Perspective on Offline Reinforcement Learning

Unlike the prior papers, which present algorithms to constrain the set of considered actions, this paper argues that it is not necessary to use a specialized Offline RL algorithm. Instead, use a stronger off-policy Deep RL algorithm with better exploitation capabilities. I especially enjoyed reading this paper, since it gave me insights on off-policy reinforcement learning, and the experiments are also clean and easy to understand. Surprisingly, it was rejected from ICLR 2020, and I’m a little concerned about how a paper with this many convincing experimental results can get rejected. The reviewers also asked why we should care about Offline RL, and the authors gave a rather convincing response! (Fortunately, the paper eventually found a home at ICML 2020.)

Here is a quick summary of the paper’s experiments and contributions. When discussing the paper or referring to figures, I am referencing the second version on arXiv, which corresponds to the ICLR 2020 submission and used “Batch RL” instead of “Offline RL” so we’ll use both terms interchangeably. The paper was previously titled “Striving for Simplicity in Off-Policy Deep Reinforcement Learning.”

-

To form the batch for Offline RL, they use logged data from 50M steps of standard online DQN training. In general, one step is four environment frames, so this matches the 200M frame case which is standard for Atari benchmarks. I believe the community has settled on the 1 step to 4 frame ratio. As discussed in (Machado et al., 2018), to introduce stochasticity, the agents employ sticky actions. So, given this logged data, let’s run Batch RL, where we run off-policy deep Q-learning algorithms with a 50M-sized replay buffer, and sample items uniformly.

-

They show that the off-policy, distributional-based Deep RL algorithms Categorical DQN (i.e., C51) and Quantile Regression DQN (i.e., QR-DQN), when trained solely on that logged data (i.e., in an offline setting), actually outperform online DQN!! See Figure 2 in the paper, for example. Be careful about what this claim means: C51 and QR-DQN are already known to be better than vanilla DQN, but the experiments show that even in the absence of exploration for those two methods, they still out-perform online (i.e., with exploration) DQN.

-

Incidentally, offline C51 and offline QR-DQN also out-perform offline DQN, which as expected, is usually worse than online DQN. (To be fair, Figure 2 suggests that in 10-15 out of 60 games, offline DQN can actually outperform the online variant.) Since the experiments disentangle exploration from exploitation, we can explain the difference between performance of offline DQN versus offline C51 or QR-DQN as due to exploitation capability.

-

Thus so far we have the following algorithms, from worst to best with respect to game score: offline DQN, online DQN, offline C51, and offline QR-DQN. They did not present a full result of offline C51 except for a few games in the Appendix but I’m assuming that QR-DQN would be better in both offline and online cases. In addition, I also assume that online C51 and online QR-DQN would outperform their offline variants, at least if their offline variants are trained on DQN-generated data.

-

To add further evidence that improving the base off-policy Deep RL algorithm can work well in the Batch RL setting, their results in Figure 4 suggest that using Adam as the optimizer instead of RMSprop for DQN is by itself enough to get performance gains. In fact, this offline DQN can even outperform online DQN on average! I’m not sure how much I can believe this result, because Adam can’t offer that much of an improvement, right?

-

They also experiment with a continuous control variant, using 1M samples from a logged training run of DDPG. They apply Batch-Constrained Q-learning from (Fujimoto et al., 2019) as discussed above, and find that it performs reasonably well. But they also find that they can simply use Twin-Delayed DDPG (i.e., TD3) from (Fujimoto et al., 2018) (yes, the same guy!) and train normally in an off-policy fashion to get better results than offline DDPG. Since TD3 is known as a stronger off-policy continuous control deep Q-learning algorithm than DDPG, this further bolsters the paper’s claims that all we need is a stronger off-policy algorithm for effective Batch RL.

-

Finally, from the above observations, they propose their Random Ensemble Mixture (REM) algorithm, which uses an ensemble of Q-networks and enforces Bellman consistency among random convex combinations. This is similar to how Dropout works. There are offline and online versions of it. In the offline setting, REM outperforms C51 and QR-DQN despite being simpler. By “simpler” the authors mainly refer to not needing to estimate a full distribution of the value function for a given state, as distributional methods do.
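The random convex combination at the heart of REM can be sketched as follows. This is a simplified, single-transition illustration under assumptions: the function names are hypothetical, the mixture weights are drawn by normalizing uniform samples, and the Q-value arrays are toy numbers rather than network outputs.

```python
import numpy as np

def sample_convex_weights(num_heads, rng):
    """Draw non-negative weights that sum to one (a random convex combination)."""
    raw = rng.uniform(0.0, 1.0, size=num_heads)
    return raw / raw.sum()

def rem_td_error(q_heads, target_q_heads, action, reward, gamma, alpha, done=False):
    """TD error for one transition under mixture weights alpha.

    q_heads:        (K, num_actions) online Q-heads evaluated at state s
    target_q_heads: (K, num_actions) target Q-heads evaluated at next state s'
    """
    q_mix = alpha @ q_heads                 # mixed Q-values at s, shape (num_actions,)
    target_mix = alpha @ target_q_heads     # mixed target Q-values at s'
    bootstrap = 0.0 if done else gamma * target_mix.max()
    return reward + bootstrap - q_mix[action]

rng = np.random.default_rng(0)
alpha = sample_convex_weights(4, rng)

# If all heads agree, the mixture reduces to ordinary Q-learning for any alpha.
q_heads = np.tile([1.0, 2.0, 3.0], (4, 1))
target_q_heads = np.tile([0.0, 1.0, 4.0], (4, 1))
td = rem_td_error(q_heads, target_q_heads, action=1, reward=1.0, gamma=0.5, alpha=alpha)
```

In training, a fresh `alpha` would be drawn for each minibatch, so the heads are pushed to be Bellman-consistent under many different mixtures rather than one fixed average.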

That’s not all they did. In an older version of the paper, they also tried experiments with logged data from a training run of QR-DQN. However, the lead author told me he removed those results since there were too many experiments which were confusing readers. In addition, for logged data from training QR-DQN, it is necessary to train an even stronger off-policy Deep RL algorithm to out-perform the online QR-DQN algorithm. I have to admit, sometimes I was also losing track of all the experiments being run in this paper.

Here is a handy visualization of some algorithms involved in the paper: DQN, QR-DQN, Ensemble-DQN (their baseline) and REM (their algorithm):

My biggest takeaway from reading this paper is that in Offline RL, the quality of the data matters significantly, and it is better to use data from many different policies rather than one fixed policy. That they get logged data from a training run means that, literally, every four steps, there was a gradient update to the policy parameters and thus a change to the policy itself. This induces great diversity in the data for Offline RL. Indeed, (Fujimoto et al., 2019) argues that the success of REM and off-policy algorithms more generally depends on the training data composition. Thus, it is not generally correct to think of these papers contradicting each other; they are more accurately thought of as different ways to achieve the same goal. Perhaps the better way going forward is simply to use larger and larger datasets with strong off-policy algorithms, while also perhaps specializing those off-policy algorithms for the batch setting.

IRIS: Implicit Reinforcement without Interaction at Scale for Learning Control from Offline Robot Manipulation Data

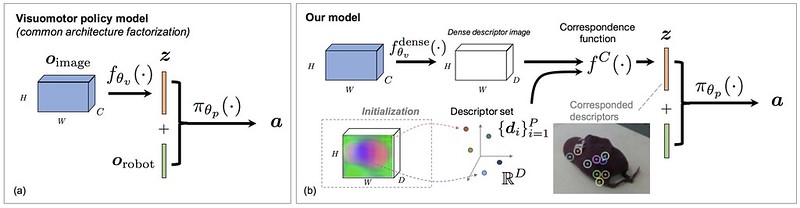

This paper proposes the algorithm IRIS: Implicit Reinforcement without Interaction at Scale. It is specialized for offline learning from large-scale robotics datasets, where the demonstrations may be either suboptimal or highly multi-modal. The algorithm is motivated by the same off-policy, Batch RL considerations as other papers I discuss here, and I found this paper because it cited a bunch of them. Their algorithm is visualized below:

To summarize:

-

IRIS splits control into “high-level” and “low-level” controllers. The high-level mechanism, at a given state $s_t$, must pick a new goal state $s_g$. Then, the low-level mechanism is conditioned on that goal state, and produces the actual actions $a \sim \pi_{im} (s_t | s_g)$ to take.

-

The high-level policy is split in two parts. The first samples several goal proposals. The second picks the best goal proposal to pass to the low-level controller.

-

The low-level controller, given the goal $s_g$, takes $T$ actions conditioned on that goal. Then, it returns control to the high level policy, which re-samples the goal state.

-

The episode terminates when the agent gets sufficiently close to the true goal state. This is a continuous state domain, so they simply pick a distance threshold to the state. They are also in the sparse reward domain, adding another challenge.
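The control flow described in the list above can be sketched as a simple loop. Everything here is a toy stand-in under stated assumptions: in IRIS the goal proposals come from a learned goal cVAE and the goal values from a learned value function, whereas this sketch uses a fixed grid of offsets and negative distance to the true goal, and the "environment" is a 2D point that moves by the commanded displacement.

```python
import numpy as np

def run_iris_episode(state, true_goal, propose_goals, goal_value, low_level,
                     step, horizon_T=5, threshold=0.1, max_steps=200):
    """Alternate between high-level goal selection and T low-level control steps."""
    steps = 0
    while steps < max_steps:
        proposals = propose_goals(state)          # high level, part 1: sample goal proposals
        goal = max(proposals, key=goal_value)     # high level, part 2: pick the best proposal
        for _ in range(horizon_T):                # low level: act for T steps toward the goal
            action = low_level(state, goal)
            state = step(state, action)
            steps += 1
            if np.linalg.norm(state - true_goal) < threshold:
                return state, steps, True         # close enough to the true goal
    return state, steps, False

# Toy stand-ins (assumptions for illustration only).
TRUE_GOAL = np.array([1.0, 1.0])
OFFSETS = [np.array([dx, dy]) for dx in (-0.5, 0.0, 0.5) for dy in (-0.5, 0.0, 0.5)]

def toy_propose_goals(state):
    return [state + offset for offset in OFFSETS]

def toy_goal_value(goal):
    return -np.linalg.norm(goal - TRUE_GOAL)

def toy_low_level(state, goal):
    return np.clip(goal - state, -0.1, 0.1)       # move at most 0.1 per coordinate

def toy_step(state, action):
    return state + action

final_state, n_steps, success = run_iris_episode(
    np.zeros(2), TRUE_GOAL, toy_propose_goals, toy_goal_value, toy_low_level, toy_step)
```

In this toy setting the agent needs two high-level decisions of five low-level steps each to reach the goal.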

How are the components trained?

-

The first part of the high-level controller uses a goal conditional Variational AutoEncoder (cVAE). Given a sequence of states in the data, IRIS samples pairs that are $T$ time steps apart, i.e., $(s_t, s_{t+T})$. The encoder $E(s_{t},s_{t+T})$ maps the pair to the parameters of a Gaussian over the latent variable, i.e., $\mu, \sigma =E(s_{t},s_{t+T})$. The decoder must reconstruct the future state: $\hat{s}_{t+T} \sim D(s_t, z)$ where $z$ is sampled from the Gaussian with mean $\mu$ and standard deviation $\sigma$. This is for training; at test time, they sample $z$ from a standard normal, $z \sim \mathcal{N}(0,1)$ (with regularization during training), and pass it to the decoder so that it produces goal states.

-

The second part uses an action cVAE as part of their simpler variant of Batch Constrained Deep Q-learning (discussed at the beginning of this blog post) for the value function in the high-level controller. This cVAE, rather than predicting goals, will predict actions conditioned on a state. This can be trained by sampling state-action pairs $(s_t,a_t)$ and having the cVAE predict $a_t$. They can then use it in their BCQ algorithm because the cVAE will model actions that are more likely to be part of the training data.

-

The low-level controller is a recurrent neural network that, given $s_t$ and $s_g$, produces $a_t$. It is trained with behavior cloning, and therefore does not use Batch RL. But, how does one get the goal? It’s simple: since IRIS assumes the low-level controller runs for a fixed number of steps (i.e., $T$ steps) then they take consecutive state-action sequences of length $T$ and then treat the last state as the goal. Intuitively, the low-level controller trained this way will be able to figure out how to get from a start state to a “goal” state in $T$ steps, where “goal” is in quotes because it is not a true environment goal but one which we artificially set for training. This reminds me of Hindsight Experience Replay, which I have previously dissected.
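The way training data is carved out of a logged trajectory can be sketched with plain lists. The indexing convention is an assumption: a trajectory is taken to have states $s_0, \ldots, s_N$ and actions $a_0, \ldots, a_{N-1}$, and the function names are hypothetical.

```python
def make_goal_pairs(states, horizon_T):
    """Pairs (s_t, s_{t+T}) of the kind used to train the goal cVAE."""
    return [(states[t], states[t + horizon_T])
            for t in range(len(states) - horizon_T)]

def make_low_level_examples(states, actions, horizon_T):
    """For every window of T steps, relabel the state at the end of the window as the goal.

    Returns (state, goal, action) triples suitable for goal-conditioned behavior cloning.
    """
    examples = []
    for start in range(len(actions) - horizon_T + 1):
        goal = states[start + horizon_T]          # artificial "goal" for this window
        for t in range(start, start + horizon_T):
            examples.append((states[t], goal, actions[t]))
    return examples

# Toy trajectory: integer "states" 0..6 and a constant action of +1.
states = [0, 1, 2, 3, 4, 5, 6]
actions = [1, 1, 1, 1, 1, 1]

goal_pairs = make_goal_pairs(states, horizon_T=3)
low_level_examples = make_low_level_examples(states, actions, horizon_T=3)
```

Note that no reward is needed for either dataset; the goals are simply states that the demonstrator actually reached $T$ steps later.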

Some other considerations:

-

They argue that IRIS is able to handle diverse solutions because the goal cVAE can sample different goals, to explicitly take diversity into account. Meanwhile, the low-level controller only has to model short-horizon goals at a time “resolution” that does not easily permit many solutions.

-

They argue that IRIS can handle off-policy data because their BCQ will limit actions to those likely to be generated by the data, and hence the value function (which is used to select the goal) will be more accurate.

-

They split IRIS into higher and lower level controllers because in theory this may help handle suboptimal demonstrations: the high-level controller can pick high value goals, and the low-level controller just has to get from point A to point B. This is also pretty much why people like hierarchies in general.

Their use of Batch RL is interesting. Rather than using it to train a policy, they are only using it to train a value function. Thus, this application can be viewed as similar to papers that are concerned with off-policy RL but only for the purpose of evaluating states. Also, why do they argue their variant of BCQ is simpler? I think it is because they eschew training a perturbation model, which was used to optimally perturb the candidate actions. They also don’t seem to use a twin critic.

They evaluate IRIS on three datasets. Two use their prior work, RoboTurk. You can see an overview on the Stanford AI Blog here. I have not used RoboTurk before so it may be hard for me to interpret their results.

-

Graph Reach: they use a simple 2D navigation example, which is somewhat artificial but allows for easy testing of multi-modal and suboptimal examples. Navigation tasks are also present in other papers that test for suboptimal demonstrations, such as SAVED from Ken Goldberg’s lab.

-

Robosuite Lift: this involves the Robosuite Lift data, where a single human performed teleoperation (in simulation) using RoboTurk, to lift an object. The human intentionally provided suboptimal demonstrations.

-

RoboTurk Can Pick and Place: now they use a pick-and-place task, this time using RoboTurk to get a diverse set of samples due to using different human operators. You can see an overview on the Stanford AI Blog here. Again, I have not used RoboTurk, but it appears that this is the most “natural” of the environments tested.

Their experiments benchmark against BCQ, which is a reasonable baseline.

Overall, I think this paper has a mix of both the “action constraining” algorithms discussed in this blog post, and the “learning from large scale datasets” papers. It was the first to show that offline RL could be used as part of the process for robot manipulation. Another project that did something similar, this time with physical robots, is from DeepMind, to which we now turn.

Scaling Data-driven Robotics with Reward Sketching and Batch Reinforcement Learning

This recent DeepMind paper is the third one I discuss which highlights the benefits of a massive offline dataset (which they call “NeverEnding Storage”) coupled with a strong off-policy reinforcement learning algorithm. It shows what is possible when combining ideas from reinforcement learning, human-computer interaction, and database systems. The approach consists of five major steps, as nicely indicated by the figure:

In more detail, they are:

-

Get demonstrations. This can be from a variety of sources: human teleoperation, scripted policies, or trained policies. At first, the data is from human demonstrations or scripted policies. But, as robots continue to train and perform tasks, their own trajectories are added to the NeverEnding Storage. Incidentally, this paper considers the multi-task setup, so the policies act on a variety of tasks, each of which has its own starting conditions, particular reward, etc.

-

Reward sketching. A subset of the data points are selected for humans to indicate rewards. Since it involves human intervention, and because reward design is fiendishly difficult, this part must be done with care, and certainly cannot be done by having humans slowly and manually assign a number to every frame. (I nearly vomit when simply thinking about doing that.) The authors cleverly engineered a GUI where a human can literally sketch a reward, hence the name reward sketching, to seamlessly get rewards (between 0 and 1) for each frame.

-

Learning the reward. The system trains a reward function neural network $r_\psi$ to predict task-specific (dense) rewards from frames (i.e., images). Rather than regress directly on the sketched values, the proposed approach involves taking two frames $x_t$ and $x_q$ within the same episode, and enforcing consistency conditions with the reward functions via hinge losses. Clever! When the reward function updates, this can trigger retroactive re-labeling of rewards per time step in the NES.

-

Batch RL. A specialized Batch RL algorithm is not necessary because of the massive diversity of the offline dataset, though they do seem to train task-specific policies. They use a version of D4PG, short for “Distributed Distributional Deep Deterministic Policy Gradients” which is … a really good off-policy RL algorithm! Since the NES contains data from many tasks, if they are trying to optimize the learned reward for a task, they will draw 75% of the minibatch from all of the NES, and draw the remaining 25% from task-specific episodes. I instantly made the connection to DeepMind’s “Observe and Look Further (arXiv 2018)” paper (see my blog post here) which implements a 75-25 minibatch ratio among demonstrator and agent samples.

-

Evaluation. Periodically evaluate the robot and add new demonstrations to NES. Their experiments consist of a Sawyer robot facing a 35 x 35 cm basket of objects, and the tasks generally involve grasping objects or stacking blocks.

-

Go back to step (1) and repeat, resulting in over 400 hours of video data.
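The reward-learning step above can be sketched in a few lines of Python. This is my own simplified reading of a pairwise hinge (ranking) loss, not the paper's exact objective: the function name and both margin values are assumptions made purely for illustration.

```python
def pairwise_hinge_loss(r_t, r_q, s_t, s_q,
                        sketch_margin=0.1, reward_margin=0.05):
    """Hinge penalty for one pair of frames (x_t, x_q) from the same episode.

    r_t, r_q: rewards predicted by the network for the two frames.
    s_t, s_q: human-sketched values in [0, 1] for the same frames.
    Both margins are illustrative assumptions.
    """
    if s_q - s_t > sketch_margin:
        # The sketch says x_q is clearly better, so we want r_q to exceed
        # r_t by at least reward_margin.
        return max(0.0, r_t - r_q + reward_margin)
    if s_t - s_q > sketch_margin:
        # Symmetric case: x_t is clearly better than x_q.
        return max(0.0, r_q - r_t + reward_margin)
    # The sketch does not clearly order the two frames: no penalty.
    return 0.0
```

The appeal of this kind of loss is that it only asks the network to respect the ordering implied by the sketch, rather than to reproduce the hand-drawn values exactly.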

There is human-in-the-loop involved, but they argue (reasonably, I would add) that reward sketching is a relatively simple way of incorporating humans. Furthermore, while human demonstrations are necessary, those are ideally drawn from existing datasets.
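The 75-25 minibatch split from the Batch RL step is also easy to picture in code. Below is a rough sketch, assuming the NES and the task-specific episodes are just Python lists. The name `sample_mixed_minibatch` and its arguments are hypothetical, not anything from the paper, and sampling with replacement is my own simplification.

```python
import random


def sample_mixed_minibatch(all_episodes, task_episodes, batch_size=64,
                           all_fraction=0.75, rng=None):
    """Draw a minibatch that mixes general and task-specific data.

    all_fraction of the batch comes from the full storage, and the rest
    comes from episodes of the task being optimized. Items are drawn
    with replacement.
    """
    rng = rng or random.Random()
    n_all = int(round(batch_size * all_fraction))
    n_task = batch_size - n_all
    batch = rng.choices(all_episodes, k=n_all)
    batch += rng.choices(task_episodes, k=n_task)
    rng.shuffle(batch)  # avoid a fixed ordering of the two sources
    return batch
```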

They say they will release their dataset so that it can facilitate development of subsequent Batch RL algorithms, though my impression is that we might as well deploy D4PG, so I am not sure if this will spur more Batch RL algorithms. On a related note, if you are like me and have trouble following all of the “D”s in the algorithm and all of DeepMind’s “state of the art” reinforcement learning algorithms, DeepMind has a March 31 blog post summarizing the progression of algorithms on Atari. I wish we had something similar for continuous control, though.

Here are some comparisons between this and the ones from (Agarwal et al., 2020) and (Mandlekar et al., 2020) previously discussed:

-

All papers deal with Batch RL from large datasets, though the datasets themselves differ: Atari versus RoboTurk versus this new dataset, which will hopefully be publicly available. This paper appears to be the only one capable of training Batch RL policies to perform well on new tasks. The analogue for Atari would be training a Batch RL agent on several games, and then applying it (or fine-tuning it) to a new Atari game, but I don’t think this has been done.

-

This paper agrees with the conclusions of (Agarwal et al., 2020) that having a sufficiently large and diverse dataset is critical to the success of Offline RL.

-

This paper uses D4PG as a very powerful, offline RL algorithm for learning policies, whereas (Agarwal et al., 2020) proposes a simpler version of Quantile-Regression DQN for discrete control, and (Mandlekar et al., 2020) only use Batch RL to train a value function instead of a policy.

-

This paper proposes the novel reward sketching idea, whereas (Agarwal et al., 2020) only use environments that give dense rewards, and (Mandlekar et al., 2020) use environments with sparse rewards that indicate task success.

-

This paper does not factorize policies into lower and higher level controllers, unlike (Mandlekar et al., 2020), though I assume in principle it is possible to merge the ideas.

In addition to the above comparisons, I am curious about the relationship between this paper and RoboNet from CoRL 2019. It seems like both projects are motivated by developing large datasets for robotics research, though the latter may be more specialized to visual foresight methods, but take my judgment with a grain of salt.

Overall, I have hope that, with disk space getting cheaper and cheaper, we will eventually have robots deployed in fleets that can draw upon this storage in some way.

Concluding Remarks and References

What are some of the common themes or thoughts I had when reading these and related papers? Here are a few:

-

When reading these papers, take careful note as to whether the data is generated from a non-stationary or a stationary policy. Furthermore, how diverse is the dataset?

-

The “data diversity” and “action constraining” aspects of this literature may be complementary, but I am not sure if anyone has shown how well those two mix.

-

As I mention in my blog posts, it is essential to figure out ways that an imitator can outperform the expert. While this has been demonstrated with algorithms that combine RL and IL with exploration, the Offline RL setting imposes extra constraints. If RL is indeed powerful enough, maybe it is still able to outperform the demonstrator in this setting. Thus, when developing algorithms for Offline RL, merely meeting the demonstrator behavior is not sufficient.

Happy offline reinforcement learning!

Here is a full listing of the papers covered in this blog post, in order of when I introduced the paper.

-

Sascha Lange, Thomas Gabel, Martin Riedmiller. Batch Reinforcement Learning. Book Chapter, “Reinforcement Learning: State of the Art,” 2012.

-

Sergey Levine, Aviral Kumar, George Tucker, Justin Fu. Offline Reinforcement Learning: Tutorial, Review, and Perspectives on Open Problems, arXiv 2020.

-

Scott Fujimoto, David Meger, Doina Precup. Off-Policy Deep Reinforcement Learning without Exploration, ICML 2019.

-

Scott Fujimoto, Edoardo Conti, Mohammad Ghavamzadeh, Joelle Pineau. Benchmarking Batch Deep Reinforcement Learning Algorithms, NeurIPS 2019 workshop.

-

Aviral Kumar, Justin Fu, George Tucker, Sergey Levine. Stabilizing Off-Policy Q-Learning via Bootstrapping Error Reduction, NeurIPS 2019.

-

Rishabh Agarwal, Dale Schuurmans, Mohammad Norouzi. An Optimistic Perspective on Offline Reinforcement Learning, ICML 2020.

-

Ajay Mandlekar, Fabio Ramos, Byron Boots, Silvio Savarese, Li Fei-Fei, Animesh Garg, Dieter Fox. IRIS: Implicit Reinforcement without Interaction at Scale for Learning Control from Offline Robot Manipulation Data, ICRA 2020.

-

Serkan Cabi, Sergio Gómez Colmenarejo, Alexander Novikov, Ksenia Konyushkova, Scott Reed, Rae Jeong, Konrad Zolna, Yusuf Aytar, David Budden, Mel Vecerik, Oleg Sushkov, David Barker, Jonathan Scholz, Misha Denil, Nando de Freitas, Ziyu Wang. Scaling Data-driven Robotics with Reward Sketching and Batch Reinforcement Learning, RSS 2020.

Finally, here is another set of Offline RL or related references that I didn’t have time to cover. I will likely modify this post in the future, especially given that I already have summary notes to myself on most of these papers (but they are not yet polished enough to post on this blog).

-

Romain Laroche, Paul Trichelair, Rémi Tachet des Combes. Safe Policy Improvement with Baseline Bootstrapping, ICML 2019.

-

Natasha Jaques, Asma Ghandeharioun, Judy Hanwen Shen, Craig Ferguson, Agata Lapedriza, Noah Jones, Shixiang Gu, Rosalind Picard. Way Off-Policy Batch Deep Reinforcement Learning of Human Preferences in Dialog, arXiv 2019.

-

Xinyue Chen, Zijian Zhou, Zheng Wang, Che Wang, Yanqiu Wu, Keith Ross. BAIL: Best-Action Imitation Learning for Batch Deep Reinforcement Learning, arXiv 2019.

-

Yifan Wu, George Tucker, Ofir Nachum. Behavior Regularized Offline Reinforcement Learning, arXiv 2019.

-

Noah Y. Siegel, Jost Tobias Springenberg, Felix Berkenkamp, Abbas Abdolmaleki, Michael Neunert, Thomas Lampe, Roland Hafner, Nicolas Heess, Martin Riedmiller. Keep Doing what Worked: Behavior Modelling Priors for Offline Reinforcement Learning, ICLR 2020.

-

Justin Fu, Aviral Kumar, Ofir Nachum, George Tucker, Sergey Levine. D4RL: Datasets for Deep Data-Driven Reinforcement Learning. arXiv 2020.

-

Aviral Kumar, Abhishek Gupta, Sergey Levine. DisCor: Corrective Feedback in Reinforcement Learning via Distribution Correction, arXiv 2020.

-

Aviral Kumar, Aurick Zhou, George Tucker, Sergey Levine. Conservative Q-Learning for Offline Reinforcement Learning, arXiv 2020.

-

Ashvin Nair, Murtaza Dalal, Abhishek Gupta, Sergey Levine. Accelerating Online Reinforcement Learning with Offline Datasets, arXiv 2020.

-

Tatsuya Matsushima, Hiroki Furuta, Yutaka Matsuo, Ofir Nachum, Shixiang Gu. Deployment-Efficient Reinforcement Learning via Model-Based Offline Optimization, arXiv 2020.

-

Rahul Kidambi, Aravind Rajeswaran, Praneeth Netrapalli, Thorsten Joachims. MOReL: Model-Based Offline Reinforcement Learning, arXiv 2020.

-

Tianhe Yu, Garrett Thomas, Lantao Yu, Stefano Ermon, James Zou, Sergey Levine, Chelsea Finn, Tengyu Ma. MOPO: Model-based Offline Policy Optimization, arXiv 2020.

-

Ziyu Wang, Alexander Novikov, Konrad Żołna, Jost Tobias Springenberg, Scott Reed, Bobak Shahriari, Noah Siegel, Josh Merel, Caglar Gulcehre, Nicolas Heess, Nando de Freitas. Critic Regularized Regression, arXiv 2020.

There is also extensive literature on off-policy evaluation, without necessarily focusing on policy optimization or deploying learned policies in practice. I did not focus on these as much since I wanted to discuss work that trains policies in this post.

I hope this post was helpful! As always, thank you for reading.

Getting Started with Blender for Robotics

Blender is a popular open-source computer graphics software toolkit. Most of its users probably use it for its animation capabilities, and it’s often compared to commercial animation software such as Autodesk Maya and Autodesk 3ds Max. Over the last one and a half years, I have used Blender’s animation capabilities for my ongoing robotics and artificial intelligence research. With Blender, I can programmatically generate many simulated images which then form the training dataset for deep neural network robotic policies. Since implementing domain randomization is simple in Blender, I can additionally perform Sim-to-Real transfer. In this blog post, and hopefully several more, I hope to demonstrate how to get started with Blender, and more broadly to make the case for Blender in AI research.

As of today’s writing, the latest version is Blender 2.83, which one can download from its website for Windows, Mac, or Linux. I use the Mac version on my laptop for local tests and the Linux version for large-scale experiments on servers. When watching older videos of Blender or borrowing related code, be aware that there was a significant jump between Blender 2.79 and Blender 2.80. By comparison, the gap between versions 2.80 and 2.83 is minor.

Installing Blender is usually straightforward. On Linux systems, I use wget to grab the file online from the list of releases here. Suppose one wants to use version 2.82a, which is the one I use these days. Simply scroll to the appropriate release, right-click the desired file, and copy the link. I then paste it after wget and run the command:

wget https://download.blender.org/release/Blender2.82/blender-2.82a-linux64.tar.xz

This should result in a *.tar.xz file, which for me was 129M. Next, run:

tar xvf blender-2.82a-linux64.tar.xz

The v is optional and is just for verbosity. To check the installation, cd into the resulting Blender directory and type ./blender --version. In practice, I recommend adding the Blender directory to the PATH in the ~/.bashrc like this:

export PATH=${HOME}/blender-2.82a-linux64:$PATH

which assumes I un-tarred it in my home directory. The process for installing on a Mac is similar. This way, when typing in blender, the software will open up and produce this viewer:

The starting cube shown above is standard in default Blender scenes. There’s a lot to process here, and there’s a temptation to check out all the icons to see all the options available. I recommend resisting this temptation because there’s way too much information. I personally got started with Blender by watching this set of official YouTube video tutorials. (The vast majority have automatic captions that work well enough, but a few strangely have captions in different languages despite how the audio is clearly in English.) I believe these are endorsed by the developers, or even provided by them, which attests to the quality of its maintainers and/or community. The quality of the videos is outstanding: they cover just enough detail, provide all the keystrokes used to help users reproduce the setup, and show common errors.

For my use case, one of the most important parts of Blender is its scripting capability. Blender is tightly intertwined with Python, in the sense that I can create a Python script and run it, and Blender will run through the steps in the script as if I had performed the equivalent manual clicks in the viewer. Let’s see a brief example of how this works in action, because over the course of my research, I often have found myself adding things manually in Blender’s viewer, then fetching the corresponding Python commands to be used for scripting later.

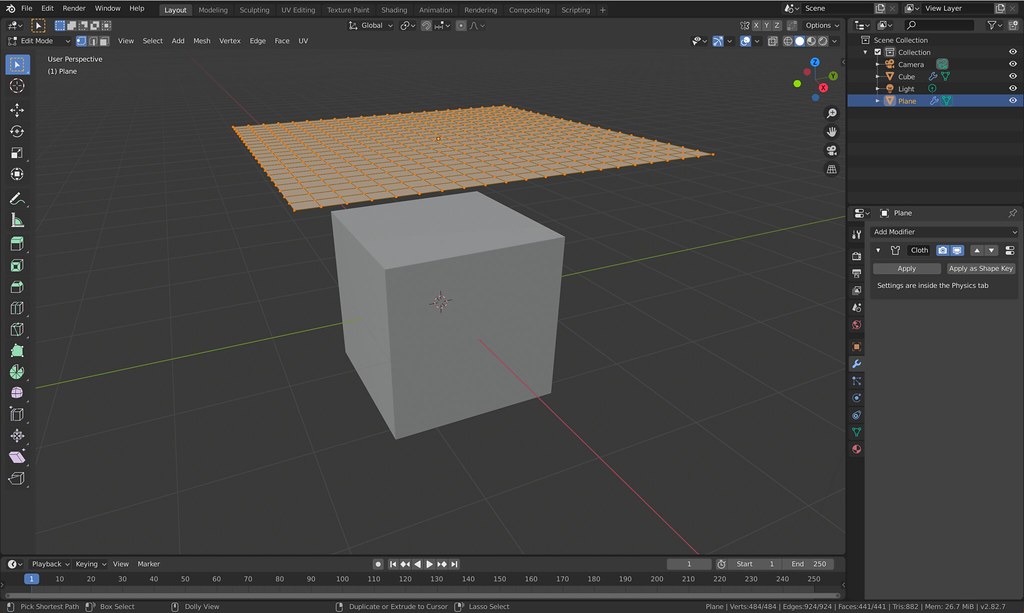

Let’s suppose we want to create a cloth that starts above the cube and falls on it. We can do this manually based on this excellent tutorial on cloth simulation. Inside Blender, I manually created a “plane” object, moved it above the cube, and sub-divided it by 15 to create a grid. Then, I added the cloth modifier. The result looks like this:

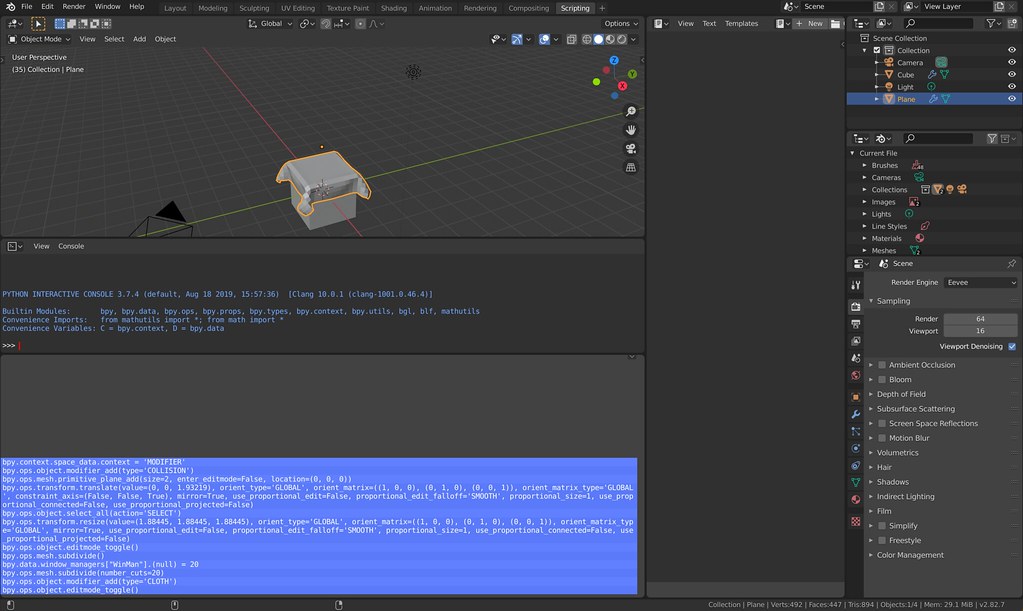

But how do we reproduce this example in a script? To do that, look at the Scripting tab, and the lower left corner window in it. This will show some of the Python commands (you’ll probably need to zoom in):

Unfortunately, there’s not always a perfect correspondence of the commands here and the commands that one has to actually put in a script to reproduce the scene. Usually there are commands missing from the Scripting tab that I need to include in my actual scripts in order to get them working properly. Conversely, some of the commands in the Scripting tab are irrelevant. I have yet to figure out a hard and fast rule, and rely on a combination of the Scripting tab, borrowing from older working scripts, and Googling stuff with “Blender Python” in my search commands.

From the above, I then created the following basic script:

# Must be imported to use much of Blender's functionality.

import bpy

# Add collision modifier to the cube (selected by default).

bpy.ops.object.modifier_add(type='COLLISION')

# Add a primitive plane (makes this the selected object). Add the translation

# method into the location to start above the cube.

bpy.ops.mesh.primitive_plane_add(size=2, enter_editmode=False, location=(0, 0, 1.932))

# Rescale the plane. (Could have alternatively adjusted the `size` above.)

# Ignore the other arguments because they are defaults.

bpy.ops.transform.resize(value=(1.884, 1.884, 1.884))

# Enter edit-mode to sub-divide the plane and to add the cloth modifier.

bpy.ops.object.editmode_toggle()

bpy.ops.mesh.subdivide(number_cuts=15)

bpy.ops.object.modifier_add(type='CLOTH')

# Go back to "object mode" by re-toggling edit mode.

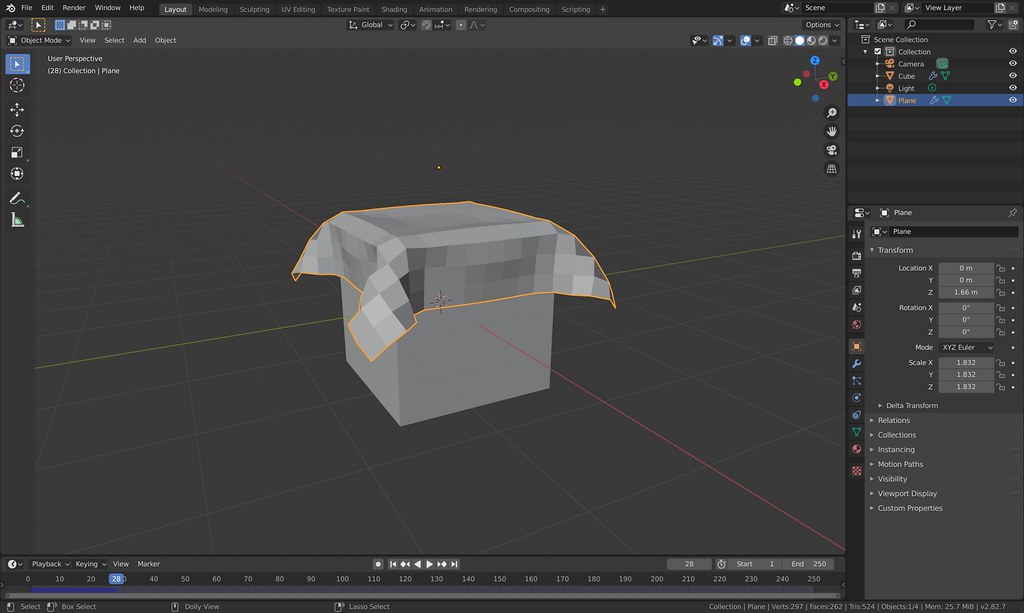

bpy.ops.object.editmode_toggle()If this Python file is called test-cloth.py then running blender -P

test-cloth.py will reproduce the setup. Clicking the “play” button at the

bottom results in the following after 28 frames:

Nice, isn’t it? The cloth is “blocky” here, but there are modifiers that can and will make it smoother.

The Python command does not need to be done in a “virtualenv” because Blender uses its own Python. Please see this Blender StackExchange post for further details.

There’s obviously far more to Blender scripting, and I am only able to scratch the surface in this post. To give an idea of its capabilities, I have used Blender for the following three papers:

- Deep Imitation Learning of Sequential Fabric Smoothing From an Algorithmic Supervisor, which is currently under review and available as a preprint on arXiv.

- Visuospatial Foresight for Multi-Step, Multi-Task Fabric Manipulation, which will appear at RSS 2020 in a few weeks.

- Learning to Smooth and Fold Real Fabric Using Dense Object Descriptors Trained on Synthetic Color Images, which is currently under review and available as a preprint on arXiv.

The first two papers used Blender 2.79, whereas the third used Blender 2.80. The first two used Blender solely for generating (domain-randomized) images from cloth meshes imported from external software, whereas the third created cloth directly in Blender and used the software’s simulator.
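For a flavor of what domain randomization can look like in a script, here is a small sketch. It is not the code from these papers: the parameter ranges are made up, and it assumes the default Blender 2.8x scene, whose objects are named Camera and Light. The bpy import is guarded so that the sampling function can also be run outside Blender.

```python
import random

try:
    import bpy  # only available inside Blender's bundled Python
except ImportError:
    bpy = None


def sample_scene_params(rng):
    """Draw one set of randomized scene parameters (ranges are made up)."""
    return {
        "camera_location": (
            7.0 + rng.uniform(-0.5, 0.5),
            -7.0 + rng.uniform(-0.5, 0.5),
            5.0 + rng.uniform(-0.5, 0.5),
        ),
        "light_energy": rng.uniform(500.0, 1500.0),
        "light_color": tuple(rng.uniform(0.8, 1.0) for _ in range(3)),
    }


def apply_scene_params(params):
    """Push sampled values onto the default scene's camera and light."""
    camera = bpy.data.objects["Camera"]
    light = bpy.data.objects["Light"]
    camera.location = params["camera_location"]
    light.data.energy = params["light_energy"]
    light.data.color = params["light_color"]


if bpy is not None:
    # Inside Blender: randomize the scene once with a fixed seed.
    apply_scene_params(sample_scene_params(random.Random(0)))
```

Keeping the sampling separate from the bpy calls makes it easy to log the drawn parameters alongside each rendered image. This sketch only shifts the camera slightly and leaves its rotation alone, so larger offsets would also need the camera re-aimed at the scene.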

In subsequent posts, I hope to focus more on Python scripting and the cloth simulator in Blender. I also want to review Blender’s strengths and weaknesses. For example, there are good reasons why the first two papers above did not use Blender’s built-in cloth simulator.

I hope this served as a concise introduction to Blender. As always, thank you for reading.

Early Summer Update

Hello everyone! Here’s a quick early summer update. I had the last few days off from research since it’s the end of the semester and a few days before I begin my remote summer internship at Google Brain. During my time off, I added a new photo album on Flickr based on my trip to Vietnam for the International Symposium on Robotics Research (ISRR) in October 2019. The album has almost 200 photos from my iPhone. I also made minor updates to my older blog posts about ISRR, which you can access in the archives, to include some featured photos.

I wanted to finish the album because going to Vietnam was one of my last major trips before the COVID-19 pandemic, and it’s one that I especially cherish among my entire travel history, because it brought me to a place I knew little about beyond reading books and news about the tragic Vietnam War. That’s one of the benefits of travel. It opens our eyes to new areas and cultures.

I also updated my earlier photo albums for some of the other conferences I attended. First, I only recently realized that my photos were private. Whoops! They should be visible now judging from my tests logging out of Flickr and checking the albums. Second, I used the Flickr “Organizr” edit setting to rearrange photos from some earlier albums to get them in order based on when I actually took the photos on my iPhone. For the ISRR 2019 album, the photos are already in order since I figured out a better way to upload photos. On my laptop, I open the Photos app, group all the photos in an album within Photos (not to be confused with an album in Flickr), and then click “File –> Export –> Export Photos.” This will make a copy of the photos on the local file system in my laptop. From there, I use Flickr’s upload feature, and order the photos alphabetically, which fortunately means the photos are in order since they are named based on numbers.

I have several other actionable items on my agenda, but admittedly these may have to be pushed back by many months. One is to improve this website design. As explained here, the blog has looked like this for over five years, and I want to experiment with changes to make the website more visually appealing. The problem is backwards compatibility: I’d need the website changes to be able to retain all my LaTeX, all my code formatting, and inevitably this means re-reading over 300 posts from the last nine years. Let me know if you have any suggestions in that regard.

As always, thanks for reading this blog. In addition, I hope you are safe, and are able to stay indoors as much as possible if you have the privilege of doing so. I hope that life will return to normal in the near future.

My Third Berkeley AI Research Blog Post

Hello everyone! My silence on this blog is because I was hard at work last month writing for another blog, the Berkeley AI Research (BAIR) Blog. Today, my collaborators and I just released a new post which describes our work in robotics and deformable object manipulation. As I’ve done with my past two BAIR Blog posts (here and here), I will mention a few words about it.

Our post is unusual in that it features papers from two different labs that didn’t formally collaborate on them. We feature four research papers in the post, two from Professor Pieter Abbeel’s lab and two from Professor Ken Goldberg’s lab. In case you’re wondering, no, we were not aware that we were working independently on these projects. I vividly remember submitting my fabric smoothing paper to arXiv back in September … and then, a few days later, seeing Lerrel Pinto (soon to be on the faculty at NYU) present us with results that were essentially what I had just showed in my paper! To be clear, it was a pleasant surprise, not an unwanted one. The more people working on the topic, the better.

Despite the focus on similar robotics tasks, the machine learning techniques we used were different. In fact, there’s an elegant, hierarchical way of categorizing our collective work. At the top, we have model-free versus model-based methods. They are further sub-divided into imitation learning versus reinforcement learning (for model-free methods) and image-space versus latent-space (for model-based methods). This neat split in our work fortunately made it easy for us not only to write this blog post (in the sense that the organization was clear from the start) but also to convey to the reader that there is no one way to approach a robotics problem. In fact, I would argue that the sign of being a true expert in one’s field is understanding the tradeoffs among various techniques that could, in theory, solve a certain problem.

I hope this post is an effective high-level introduction to the many ways we can approach robot learning problems.

In sum, here are the three BAIR Blog posts that I have written (comments are welcome):

- (August 2017) Minibatch Metropolis-Hastings, written with John Canny.

- (October 2018) Drilling Down on Depth Sensing and Deep Learning, written with Jeff Mahler, Mike Danielczuk, Matthew Matl, and Ken Goldberg.

- (May 2020) Four Novel Approaches to Manipulating Fabric using Model-Free and Model-Based Deep Learning in Simulation, written with Wilson Yan and Ryan Hoque.

All my posts took significant effort to write. I know I probably spend too much time blogging compared to what I “should” be doing as a typical PhD student, but I enjoy it too much to give it up. I plan to write at least one more blog post before graduation. At that point hopefully someone will magically appear out of thin air to take over the BAIR Blog maintenance duties from me …

As an extra bit of bonus information for reading my personal blog, here are some behind-the-scenes statistics about the BAIR Blog. First, let’s look at the number of subscribers:

Here, I show the growth in subscribers from May 2019 to April 2020. (We started the blog in July 2017.) At the time I took the screenshot, we had 5,878 subscribers. Of these, for any given email notifying subscribers of a new BAIR Blog post, about 41.0% will open the email, and then a further 6.8% of them will actually click on the link that we provide to the blog post. Not bad! I definitely think each BAIR blog post gets more attention than the average research paper.

Oh, and we have 536 subscribers that, for whatever reason, subscribed and then unsubscribed. What gives?!?

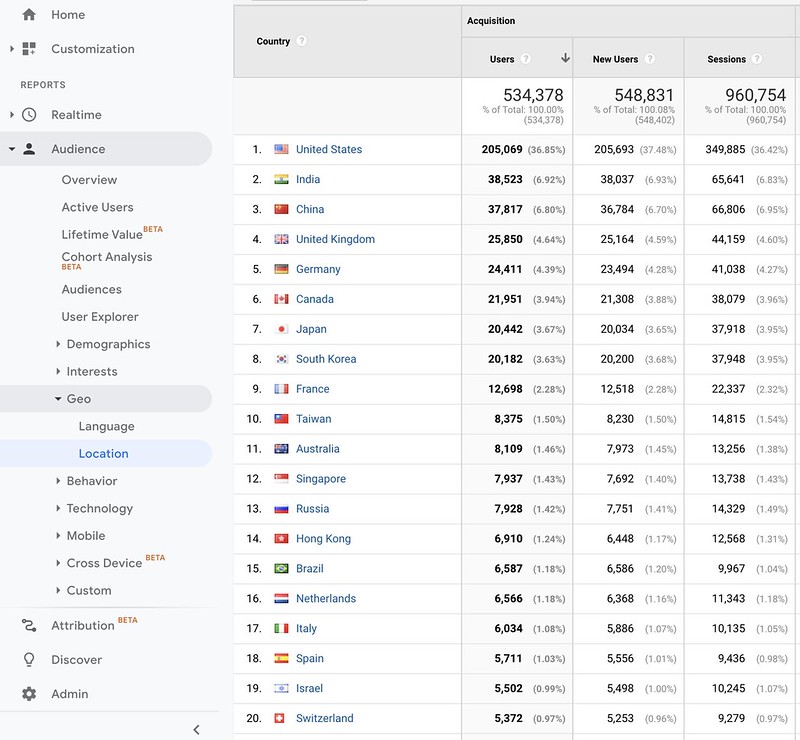

Now let’s switch over to page views, courtesy of Google Analytics. Here’s what I see when I list the countries of origin of our visitors, from the BAIR Blog’s entire history.

The United States is the clear leader here, with India and China the next two countries. If anything I’m surprised that the gap between the United States and India (or China) is that large. I think that Indian or Chinese citizens who access the blog while located in the United States get counted as a United States user. I’ll have to check how Google Analytics actually works here, but this seems to be the most logical conclusion.

The rest of the list also isn’t that surprising. Singapore and Hong Kong are showing, despite being the size of cities, that they have a large set of Artificial Intelligence enthusiasts.

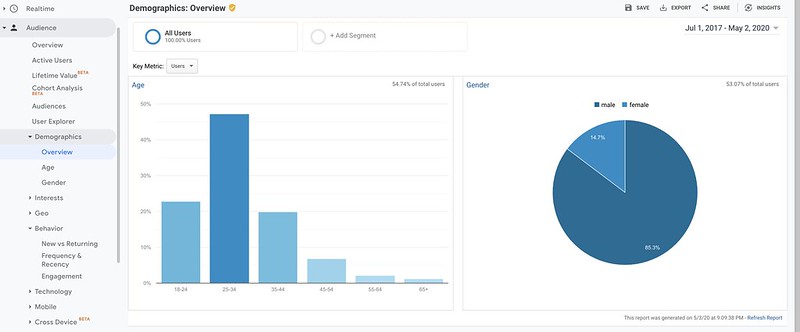

In terms of demographics, the BAIR Blog audience is estimated to be about 85% male, 15% female, as shown below. I know, we’re trying to work on this. (I frequently email BAIR students and postdocs requesting blog posts, and I do this slightly more towards females to at least balance out the authorship.)

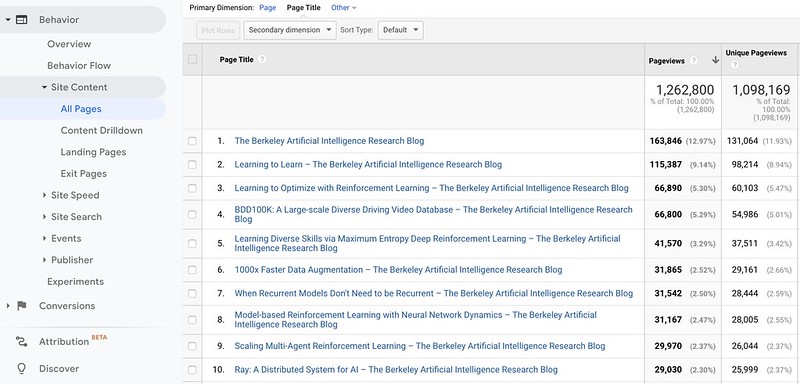

Here’s what happens when I look at the most popular blog posts and the page views from the beginning of the blog:

The most popular blog post by far is Chelsea Finn’s post about Model Agnostic Meta Learning (MAML), the wildly popular meta-learning algorithm for enabling deep neural networks to rapidly adapt to new tasks. Incidentally, that algorithm was a key reason why Finn landed a faculty position at Stanford. Most of the other popular posts are about (deep) reinforcement learning, which continues to be a Berkeley specialty. My first two blog posts are somewhat farther down the list, with about 10,000 page views for each. That’s still a respectable amount of views.

Well, I hope that was an interesting behind-the-scenes look at the BAIR Blog. Say, I should probably contact the maintainers of the Stanford AI Blog and the CMU Machine Learning Blog to see how much we’re dominating them in terms of subscriber count and page views …

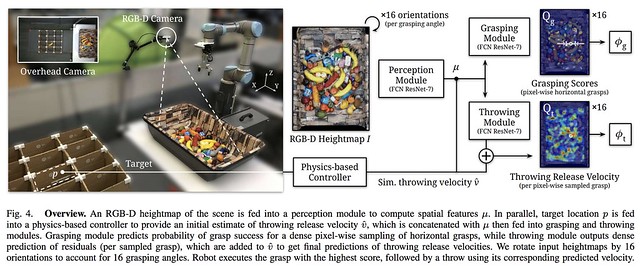

Fully Convolutional Neural Networks for Fast and Reliable Robotic Manipulation

The figure above, from the TossingBot paper with caption included, shows an example of how to use fully convolutional neural networks for robotic manipulation.

Given the COVID-19 situation and the “shelter-in-place” order in the Bay Area, I have been working remotely the last few weeks. The silver lining is that, because I recently wrapped up a bunch of projects, I was already planning to use my Spring Break (which was last week) for brainstorming new research projects, which is more suitable for remote work, and I am fortunate that my job affords that opportunity. Part of the brainstorming process involves plowing through research papers on my never-ending “TODO” list. So while working at home during a pandemic has not been as good for me as it was for Sir Isaac Newton back then, it has not been terrible. I was able to read through three papers (and re-read one paper) about robotic manipulation using fully convolutional neural networks.

In particular, this blog post will discuss these four recent robotics papers, which I abbreviate as follows (see the bottom of the post for a full set of citations):

- Pick-and-Place (at ICRA 2018 and in IJRR 2019)

- Pushing and Grasping Synergies (at IROS 2018)

- Tossing Bot (at RSS 2019)

- Form2Fit (at ICRA 2020)

I already dissected Form2Fit in a prior blog post but I will revisit the paper as it is highly related to the first three. This blog post will compare and contrast the techniques used in these four papers.

The papers specialize in image-based robotic manipulation, where decisions are made on the basis of dense, per-pixel calculations. We call these “dense” operations because they compute something for every pixel in an input image. For an example of a concept that involves dense operations, see my recent blog post on dense object descriptors.

In order to efficiently perform dense per-pixel operations, the authors employ Fully Convolutional Neural Networks (FCNs). For a refresher on these, you can read the massively influential CVPR 2015 paper or perhaps look at resources such as Stanford’s CS 231n class. While FCNs were originally developed for semantic segmentation tasks, the papers I discuss here show how FCNs can be used for robotic manipulation.

Well, what are these papers about, and how do they use FCNs?

First, the Pick-and-Place paper focuses on picking out cluttered items from a bin. Their system employs several FCNs (as we’ll see, using several streams is common) to map from an image of a workspace (i.e., a bin of objects) to a value between 0 and 1, which is called an “affordance.” Numbers closer to 1 are better. Affordances should not be interpreted as a probability, even though I often think of them that way, because the training labels are not determined by measuring a probability of success, but by a relative scale labeled by human users. There are four action primitives: two for suctioning, and two for grasping, and the exact type used is not learned but hard-coded via surface normals (for suctioning) or location near a bin edge (for grasping). To handle grasp rotations, the authors simply discretize rotation into 16 groups by cleverly rotating the input RGBD images, and then passing all the images in parallel through the FCN. Interestingly, the Appendix reports other modeling architectures, such as $n$ separate FCNs, but that was sample inefficient and also challenging to load in GPU memory. While this isn’t the focus of my blog post, they interestingly do a pick first, then recognize framework, rather than the reverse which is probably more common. So, their robot picks the grasped object, and runs a separate neural network to recognize it. The predicted image class then tells the robot where to stow the object.
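The rotate-then-pick-the-best idea can be sketched in pure Python. This is an illustration under my own assumptions, not the paper's implementation: the FCN outputs are represented as nested lists with one affordance map per rotation, the rotations are assumed to be evenly spaced over 360 degrees, and `best_pixel_and_rotation` is a hypothetical helper name.

```python
def best_pixel_and_rotation(affordance_maps):
    """Pick the highest-affordance pixel across all rotated inputs.

    affordance_maps[k][i][j] is the score in [0, 1] that the network
    assigns to pixel (i, j) of the input rotated by the k-th angle.
    """
    best = None
    for k, amap in enumerate(affordance_maps):
        for i, row in enumerate(amap):
            for j, score in enumerate(row):
                if best is None or score > best[0]:
                    best = (score, k, i, j)
    score, k, i, j = best
    # Assumption: rotations are evenly spaced over a full turn.
    angle_deg = k * 360.0 / len(affordance_maps)
    return {"score": score, "rotation_index": k,
            "angle_deg": angle_deg, "pixel": (i, j)}
```

With 16 maps, each rotation index corresponds to a multiple of 22.5 degrees. The key point is that a single network handles every orientation, because the rotation is applied to the input rather than learned as an output.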

Second, the Pushing and Grasping Synergies paper investigates how to simultaneously learn pushing and grasping actions to pick items from a workspace and put them in an external bin. The reason for learning pushing (and not just grasping) is that they consider a workspace with objects situated next to each other, so that pushing first, then grasping, to isolate objects is often a better strategy than grasping alone. The system uses model-free deep Q-learning to train two FCNs, one for pushing and the other for grasping, and training is entirely self-supervised: the authors cleverly set up a system so that the robot can dump a box of objects on the workspace, and then try pushing and grasping actions. Eventually, it trains the two Q-networks well enough that they can be deployed in scenarios with novel objects. The paper says just 5.5 hours of real-world data training is needed.

Third, the TossingBot paper investigates how to train a robot to throw arbitrary objects into target bins. Why do this, beyond generating cool videos? Throwing increases the range of the robot’s reachability and it may increase picking speed. The paper explores the synergy between grasps and throws, and jointly learns the two primitives so that the robot performs grasps that enable good throws. (It reminds me of the synergy between pushing and grasping from the prior paper!) The throwing part uses the idea of residual physics. It learns a residual on the velocity magnitude, conditioned on visual information, and then adds that to the output from an analytical physics model. That physics model helps to generalize to different target bins, and provides a reasonable initial velocity estimate. The estimate is then corrected by the learned model, because it is hard to model the forces of aerodynamic drag. The results and videos are truly impressive.
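To make the residual physics idea concrete, here is a minimal sketch. It assumes a drag-free projectile and a fixed 45-degree release angle, both of which are simplifications chosen for illustration; the function names and the hand-set `residual` value are hypothetical stand-ins for the analytical model and the network output, not the paper's implementation.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def ballistic_speed(distance, height_diff=0.0, angle_deg=45.0):
    """Release speed for a drag-free projectile to land `distance` meters away.

    `height_diff` is the landing height minus the release height (meters).
    """
    theta = math.radians(angle_deg)
    denom = 2.0 * math.cos(theta) ** 2 * (distance * math.tan(theta) - height_diff)
    if denom <= 0:
        raise ValueError("target is unreachable at this release angle")
    return math.sqrt(G * distance ** 2 / denom)


def release_speed(distance, residual, height_diff=0.0, angle_deg=45.0):
    """Analytical estimate plus a correction (a plain number in this sketch)."""
    return ballistic_speed(distance, height_diff, angle_deg) + residual


v_hat = ballistic_speed(1.5)          # analytical estimate for a 1.5 m throw
v = release_speed(1.5, residual=0.2)  # estimate after a hypothetical correction
print(f"analytical: {v_hat:.3f} m/s, corrected: {v:.3f} m/s")
```

The appeal of this split is that the analytical term already moves in the right direction when the target bin moves, so the learned part only has to account for what the simple model misses.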

Fourth, the Form2Fit paper focuses on assembling kits together with robots. While my prior blog post covers this in detail, to summarize here again, robotic kit assembly is done with a sequence of picking and placing actions. The “picking” uses a suctioning action, and we need a good suctioning action as a prerequisite to getting good placing actions. Both picking and placing are represented as FCNs. However, there is a third module, called a match network (also an FCN), which uses descriptors to indicate correspondence. Why? To associate a suction location on the object with a placing location, and to change the orientation. As I implied in my prior post, imagine we didn’t have the matching network. What would happen? Initially, given a grasped object, there are many ways we can place it successfully. But eventually we have to be able to assemble the entire kit, so each object must be inserted at exactly the right spot, and not just anywhere with high probability, so that subsequent actions can correctly fill up the kit.

So, to recap, here’s the desired output of the FCNs, assuming that they have been sufficiently trained:

-

Pick-and-Place: affordance (not probability) values for suctioning and grasping action primitives. Affordance values are bounded within $[0,1]$, and higher numbers are better.

-

Pushing and Grasping Synergies: $Q(s,a)$ values, or the expected discounted sum of future rewards from taking action primitive $a$ at the given image $s$, under the robot’s target (not behavioral) policy.

-

TossingBot: the output of the grasping network is the probability of “grasping success” when grasping at any particular pixel. Be aware that the training signal depends on the subsequent throwing success. The throwing network, interestingly, outputs the desired velocity residual which is added to an initial velocity estimate from an analytical physics model.

-

Form2Fit: the outputs of the suction and place networks are the probabilities of the respective actions. The output of the match network is the dense object descriptor representation, which has the same height and width as the input image but more channels, as they used $d=64$. This is used to indicate correspondence among the suction and place actions.
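As a rough illustration of how a dense descriptor map can indicate correspondence, the sketch below compares candidate pick and place pixels by L2 distance between their descriptor vectors and returns the closest pair. The function name, the candidate lists, and the random descriptor maps are all hypothetical; a real system would get the maps from a trained network.

```python
import numpy as np


def best_pair(pick_desc, place_desc, pick_pixels, place_pixels):
    """Return the (pick, place) pixel pair with the smallest L2 descriptor distance.

    pick_desc, place_desc: arrays of shape (H, W, D).
    pick_pixels, place_pixels: lists of (row, col) candidates.
    """
    pick_vecs = np.array([pick_desc[r, c] for r, c in pick_pixels])     # (P, D)
    place_vecs = np.array([place_desc[r, c] for r, c in place_pixels])  # (Q, D)
    # Pairwise distances via broadcasting: (P, 1, D) - (1, Q, D) -> (P, Q)
    dists = np.linalg.norm(pick_vecs[:, None, :] - place_vecs[None, :, :], axis=-1)
    i, j = np.unravel_index(np.argmin(dists), dists.shape)
    return pick_pixels[i], place_pixels[j], float(dists[i, j])


rng = np.random.default_rng(0)
pick_desc = rng.normal(size=(8, 8, 64))
place_desc = rng.normal(size=(8, 8, 64))
place_desc[5, 6] = pick_desc[2, 3]  # plant one perfect correspondence
pick, place, dist = best_pair(
    pick_desc, place_desc, [(2, 3), (4, 4)], [(1, 1), (5, 6)]
)
print(pick, place, dist)
```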

In order to make those FCNs output desired values, we need to train them. How does the process of collecting labels and training work for each method?

-

Pick-and-Place: skilled and experienced human users must manually label the affordances. Thus, this is the only paper among the four here that does not employ automatic data labeling via trial and error. The human manually labels pixels as positive, negative, or neither, and then pixels with neither are trained with a loss value of 0 via backpropagation. The authors had to design an interface to make this feasible, and it has to be sparsely labeled to make this practical. The training data consists of fewer than 2000 of these manually labeled images, though this is surely before data augmentation. Interestingly, 2000 is roughly on the order of how many images I had for our bed-making paper.

-

Pushing and Grasping Synergies: the labels are implicit through reinforcement learning rewards. Their reward design is simple: a $+1$ for a successful grasp and a $+0.5$ for a successful push that “meaningfully changes the scene” (the latter requires a hard-coded threshold). Through model-free reinforcement learning and backpropagation, the FCNs update their parameters so that their outputs approximate the learned value function.

-

TossingBot: the robot collects data through trial-and-error, and the videos show how the system is set up to be self-supervised to keep human intervention at a minimum. The grasping network is trained with throwing success, not grasping success. This is critical because the whole point of grasping is to enable good throws! Therefore, when I say “grasping success probability” it really should be interpreted as “probability that this grasp will be successful for a subsequent throw.” They automatically get this label by checking if the grasped object landed in the target box. For the throw, we first get the analytical estimate \(\|\hat{v}_{x,y}\|\) from physics equations conditioned on a known target spot. Then, we get the actual landing spot from overhead cameras, which I assume are similar to the ones for detecting throwing success, and can deduce the true residual from that.

- Form2Fit: the data collection here is a bit subtle, and covered in depth in my prior blog post. It’s clever and involves reversing the task, i.e., disassembly. It is easier to disassemble than assemble, and by doing this, the robot gets data points for training the picking and placing modules, and then training the dense object net to get the match module. Once again for a grasp point or suction point, we take a single pixel (actually, a radius around it) and then backpropagate through it.
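The sparse labeling described for Pick-and-Place, where unlabeled pixels contribute zero loss, can be sketched with a simple mask. This is a minimal illustration that assumes a per-pixel binary cross-entropy and a `-1` code for unlabeled pixels; both are choices made here for the example, and the paper's actual loss may differ.

```python
import numpy as np


def masked_bce(pred, labels):
    """Mean binary cross-entropy over labeled pixels only.

    pred:   (H, W) predicted affordances in (0, 1).
    labels: (H, W) with 1 = positive, 0 = negative, -1 = unlabeled.
    """
    mask = labels >= 0
    eps = 1e-7
    p = np.clip(pred, eps, 1 - eps)
    y = np.where(mask, labels, 0).astype(float)
    per_pixel = -(y * np.log(p) + (1 - y) * np.log(1 - p))
    per_pixel = per_pixel * mask  # unlabeled pixels contribute zero loss
    return per_pixel.sum() / max(mask.sum(), 1)


pred = np.array([[0.9, 0.2], [0.5, 0.5]])
labels = np.array([[1, 0], [-1, -1]])  # bottom row is unlabeled
loss = masked_bce(pred, labels)
print(loss)
```

Because the mask zeroes out the unlabeled pixels, changing the predictions in the bottom row leaves the loss unchanged, which is what lets a human label only a handful of pixels per image.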

Now that we have the FCNs, what actions should the robot take at each time step? This is generally straightforward once the FCNs have done their heavy duty task in getting per-pixel image numbers:

-

Pick-and-Place: given all the possible action primitives along with all the rotations, pick the single pixel with the highest affordance value, and execute that action. This involves a maximum operation over every single image output from the FCN (including a factor of 16x for rotations), and then a second maximum over pixels in them. That’s the idea, but in practice they employed some heuristics. One is “suction first then grasp,” which led them to artificially scale the suctioning affordance values. Another one is that if the robot repeatedly tries an action but does not affect the scene — a problem I’ve experienced in several research projects — then they decrease the affordances of the relevant pixels. It’s these little things that, though somewhat hacky, help maximize performance.

-

Pushing and Grasping Synergies: the action chosen is the one that maximizes the Q-values. In other words, take the maximum over all 32 possible images (16 for grasping, 16 for pushing) and over all pixels within those images. That’s a lot to consider, but the computation is parallelized.

-

TossingBot: the pixel with the highest grasp probability (from the output of the grasping module) across all orientations is chosen for the grasp point. Then, the robot will toss using the corresponding predicted velocity, which is provided in the same pixel location and same orientation in the output image of the throwing module.

-

Form2Fit: the planner first samples a set of potential actions. It then uses the descriptors to see which pick-and-place pair has the lowest L2 distance in descriptor space, and chooses that action. This “minimize distance in descriptor space” is standard for many of the robotics and descriptors papers I read nowadays. It can be expensive to sample and evaluate so many actions, so it is necessary to tune the sampling frequency.
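For the argmax-style selection used by the first three methods, a minimal sketch looks like the following. It assumes the FCN outputs have been stacked into a single array of shape `(num_primitives, num_rotations, H, W)`; the function name and this layout are assumptions for illustration, and the heuristics mentioned above (such as rescaling suction affordances) are left out.

```python
import numpy as np


def select_action(maps, num_rotations=16):
    """Pick the best (primitive, rotation, pixel) from stacked per-pixel maps.

    maps: array of shape (num_primitives, num_rotations, H, W).
    Returns (primitive index, rotation angle in degrees, (row, col), value).
    """
    prim, rot, row, col = np.unravel_index(np.argmax(maps), maps.shape)
    angle_deg = rot * 360.0 / num_rotations
    value = float(maps[prim, rot, row, col])
    return int(prim), float(angle_deg), (int(row), int(col)), value


maps = np.zeros((2, 16, 4, 5))
maps[1, 3, 2, 4] = 0.9  # plant a single best pixel
action = select_action(maps)
print(action)
```

Note that the returned pixel lives in the frame of the rotated image, so a real system would still need to map it back to workspace coordinates before executing the primitive.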

Overall, what do these papers suggest as the advantages of the FCN-based approach?

-

The technique is object-agnostic in that it does not make any assumptions about the kind of objects the robot might grasp.

-

FCNs are efficient for per-pixel calculations, and this is helpful when we want a label for every pixel in an input image. In addition, the resulting action is often a simple function of the FCN output, such as taking an “argmax” across the pixels, as mentioned earlier. Some other alternatives for data-driven robotic grasping, as covered in an earlier blog post, require sampling a set of image patches or running the Cross Entropy Method.

-

Their specific architectural choice of rotating the input image into 16 orientations means they do not need to consider rotation as part of the action, simplifying the primitive. In addition, by keeping the different rotations in one architecture, rather than splitting into 16 different networks or 16 different trunks, they can use weight sharing to improve generalization and training efficiency.

-

Since the output has the same height and width as the input, with per-pixel values, one can debug and/or interpret the output by looking at a heat map to see which values are higher.

There is other work that uses FCNs for efficient grasping, such as one that came right out of our own AUTOLAB and was presented at ICRA 2019. That paper, interestingly, trained a Convolutional Neural Network and then converted it to a Fully Convolutional Neural Network, to avoid the manual labeling done in the Robotic Pick-and-Place paper.

If you are interested in learning how to accelerate training of affordance-based policies with FCNs, I refer you to an ICRA 2020 paper which argues for the benefits of visual pre-training based on passive data without robotic interaction. This means the subsequent fine-tuning on active data from interaction is significantly shorter.

Overall, it seems like FCNs are a powerful ingredient in the machine learning and robotics toolbox, and can be combined with reinforcement learning, dense object descriptors, self-supervision, and other techniques.

Here are the full citations of the papers I discussed:

-

Andy Zeng, Shuran Song, Kuan-Ting Yu, Elliott Donlon, Francois R. Hogan, Maria Bauza, Daolin Ma, Orion Taylor, Melody Liu, Eudald Romo, Nima Fazeli, Ferran Alet, Nikhil Chavan Dafle, Rachel Holladay, Isabella Morona, Prem Qu Nair, Druck Green, Ian Taylor, Weber Liu, Thomas Funkhouser, Alberto Rodriguez. Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching. ICRA 2018, IJRR 2019.

-

Andy Zeng, Shuran Song, Stefan Welker, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser. Learning Synergies between Pushing and Grasping with Self-supervised Deep Reinforcement Learning. IROS 2018.

-

Andy Zeng, Shuran Song, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser. TossingBot: Learning to Throw Arbitrary Objects with Residual Physics. RSS 2019.

-

Kevin Zakka, Andy Zeng, Johnny Lee, Shuran Song. Form2Fit: Learning Shape Priors for Generalizable Assembly from Disassembly. ICRA 2020.

Thank you for reading, and stay safe.

My Interview with PyImageSearch's Sayak Paul

I’m pleased to share that my interview with Sayak Paul, who works at PyImageSearch, is now available to read over at his Medium blog. Here’s how he introduces me:

A warm welcome to Daniel Seita for today’s interview. Daniel is a computer science Ph.D. student at the University of California, Berkeley. His research interests broadly lie in areas like Artificial Intelligence, Robotics, and Deep Learning. He is deeply passionate about explaining technical insights and one such favorite insight of mine from Daniel’s archive is Understanding Generative Adversarial Networks. You can check out all of his blog pieces from here. He writes on a wide range of topics and has written more than 300 such pieces.

I was approached by Paul with a cold email, and agreed to do the interview for a number of reasons:

- I am honored that my blog posts have provided him insights.

- I was impressed by the wide range of inspiring people who Paul previously interviewed.

- I wanted to indirectly provide more support to PyImageSearch because that website has been a tremendously helpful resource for my research over the last few years.

To expand on the last point, PyImageSearch is incredible, filled with tutorial after tutorial in such plain-spoken, clear language. I typically use it as a reference on using OpenCV to adjust or annotate images, but PyImageSearch is also helpful for Deep Learning more broadly. For example, literally yesterday, I was learning how to write code using TensorFlow 2.0 with the new eager execution (I usually use PyTorch). As part of my learning process, I read the PyImageSearch articles on keras versus tf.keras and how to use the new tf.GradientTape feature. I have not had to pay anything to read these awesome resources, though I would be willing to do so.