My Third Berkeley AI Research Blog Post

Hello everyone! My silence on this blog is because I was hard at work last month writing for another blog, the Berkeley AI Research (BAIR) Blog. Today, my collaborators and I released a new post which describes our work in robotics and deformable object manipulation. As I’ve done with my past two BAIR Blog posts (here and here), I will say a few words about it.

Our post is unusual in that it features papers from two different labs that didn’t formally collaborate on them. We feature four research papers in the post, two from Professor Pieter Abbeel’s lab and two from Professor Ken Goldberg’s lab. In case you’re wondering, no, we were not aware at the time that both labs were independently working on these projects. I vividly remember submitting my fabric smoothing paper to arXiv back in September … and then, a few days later, seeing Lerrel Pinto (soon to be on the faculty at NYU) present us with results that were essentially what I had just shown in my paper! To be clear, it was a pleasant surprise, not an unwanted one. The more people working on the topic, the better.

Despite the focus on similar robotics tasks, the machine learning techniques we used were different. In fact, there’s an elegant, hierarchical way of categorizing our collective work. At the top, we have model-free versus model-based methods. They are further sub-divided into imitation learning versus reinforcement learning (for model-free methods) and image-space versus latent-space (for model-based methods). This neat split in our work fortunately made it easy for us not only to write this blog post (in the sense that the organization was clear from the start) but also to convey to the reader that there is no one way to approach a robotics problem. In fact, I would argue that the sign of being a true expert in one’s field is understanding the tradeoffs among various techniques that could, in theory, solve a certain problem.
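For readers who like to see structure written down, here is a minimal sketch of that two-level categorization as a Python dictionary. The names `TAXONOMY` and `leaf_categories` are hypothetical, chosen only for illustration, and the sketch deliberately does not assign any of the four papers to a category.

```python
# Two-level categorization described above: top-level method family,
# then the sub-division used within that family.
# (Names are illustrative assumptions, not from the BAIR Blog post.)
TAXONOMY = {
    "model-free": ["imitation learning", "reinforcement learning"],
    "model-based": ["image-space", "latent-space"],
}


def leaf_categories(taxonomy):
    """Return every (family, sub-category) pair in the taxonomy."""
    return [(family, sub) for family, subs in taxonomy.items() for sub in subs]


if __name__ == "__main__":
    for family, sub in leaf_categories(TAXONOMY):
        print(f"{family} -> {sub}")
```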

I hope this post is an effective high-level introduction to the many ways we can approach robot learning problems.

In sum, here are the three BAIR Blog posts that I have written (comments are welcome):

- (August 2017) Minibatch Metropolis-Hastings, written with John Canny.

- (October 2018) Drilling Down on Depth Sensing and Deep Learning, written with Jeff Mahler, Mike Danielczuk, Matthew Matl, and Ken Goldberg.

- (May 2020) Four Novel Approaches to Manipulating Fabric using Model-Free and Model-Based Deep Learning in Simulation, written with Wilson Yan and Ryan Hoque.

All my posts took significant effort to write. I know I probably spend too much time blogging compared to what I “should” be doing as a typical PhD student, but I enjoy it too much to give it up. I plan to write at least one more blog post before graduation. At that point hopefully someone will magically appear out of thin air to take over the BAIR Blog maintenance duties from me …

As an extra bit of bonus information for reading my personal blog, here are some behind-the-scenes statistics about the BAIR Blog. First, let’s look at the number of subscribers:

Here, I show the growth in subscribers from May 2019 to April 2020. (We started the blog in July 2017.) At the time I took the screenshot, we had 5,878 subscribers. Of these, for any given email notifying subscribers of a new BAIR Blog post, about 41.0% will open the email, and then a further 6.8% of them will actually click on the link that we provide to the blog post. Not bad! I definitely think each BAIR Blog post gets more attention than the average research paper.
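To put those percentages into rough head counts, here is a back-of-the-envelope sketch. The 6.8% click rate can be read two ways (as a share of everyone who received the email, or as a share of only those who opened it), so the sketch computes both readings rather than picking one. The function name `estimate_engagement` is hypothetical, and the results are rounded estimates, not figures reported by the mailing tool.

```python
def estimate_engagement(subscribers, open_rate, click_rate):
    """Convert open/click percentages into approximate head counts.

    Returns both readings of the click rate, since the source text is
    ambiguous about its denominator (an assumption made explicit here).
    """
    opens = round(subscribers * open_rate)
    return {
        "opens": opens,
        # Reading 1: click rate measured against all recipients.
        "clicks_if_of_all_recipients": round(subscribers * click_rate),
        # Reading 2: click rate measured against openers only.
        "clicks_if_of_openers": round(subscribers * open_rate * click_rate),
    }


if __name__ == "__main__":
    stats = estimate_engagement(subscribers=5878, open_rate=0.410, click_rate=0.068)
    for name, count in stats.items():
        print(f"{name}: about {count}")
```

Under these numbers, roughly 2,410 subscribers open a given email, and somewhere between about 164 and 400 click through, depending on which reading applies.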

Oh, and we have 536 people who, for whatever reason, subscribed and then unsubscribed. What gives?!?

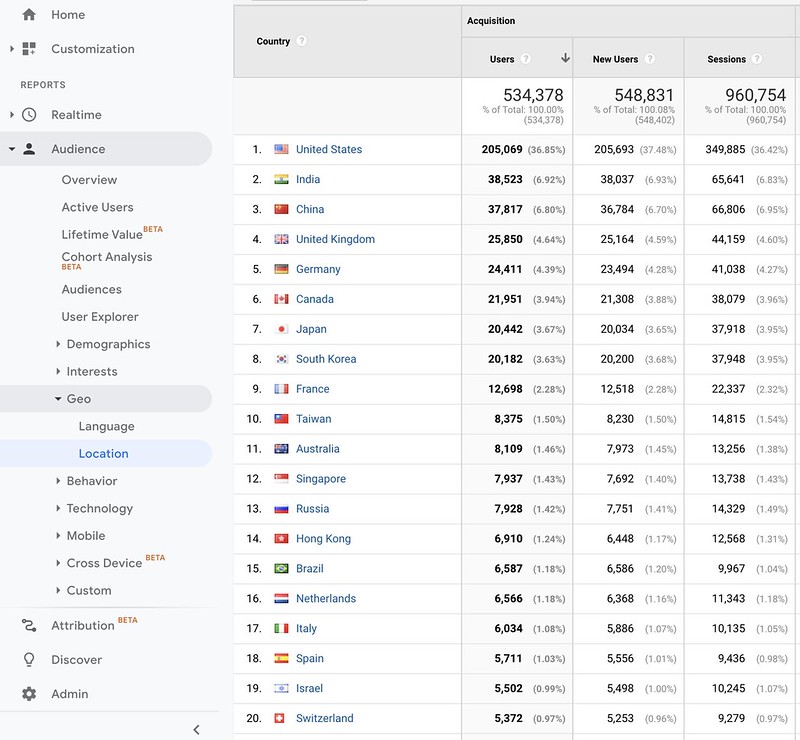

Now let’s switch over to page views, courtesy of Google Analytics. Here’s what I see when I list the countries of origin of our visitors, from the BAIR Blog’s entire history.

The United States is the clear leader here, with India and China the next two countries. If anything I’m surprised that the gap between the United States and India (or China) is that large. I think that Indian or Chinese citizens who access the blog while located in the United States get counted as United States users. I’ll have to check how Google Analytics actually works here, but this seems to be the most logical conclusion.

The rest of the list also isn’t that surprising. Singapore and Hong Kong are showing, despite being the size of cities, that they have a large set of Artificial Intelligence enthusiasts.

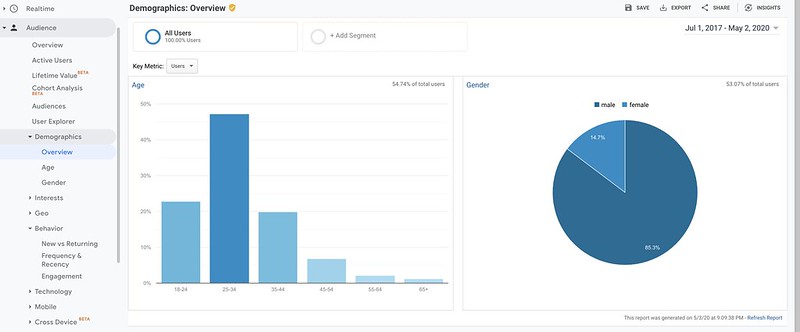

In terms of demographics, the BAIR Blog audience is estimated to be about 85% male, 15% female, as shown below. I know, we’re trying to work on this. (I frequently email BAIR students and postdocs requesting blog posts, and I do this slightly more often with women to at least balance out the authorship.)

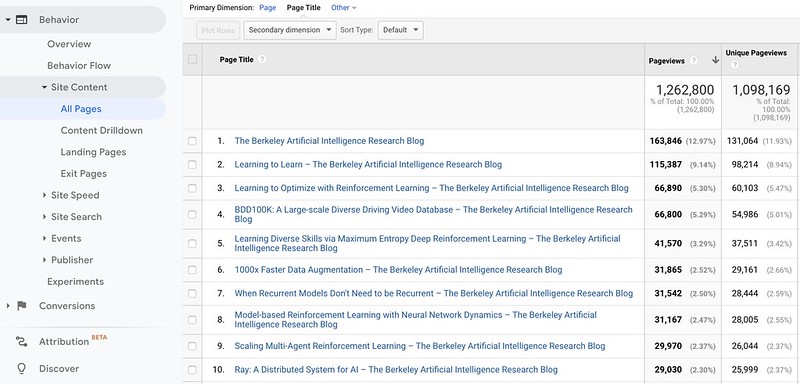

Here’s what happens when I look at the most popular blog posts and the page views from the beginning of the blog:

The most popular blog post by far is Chelsea Finn’s post about Model-Agnostic Meta-Learning (MAML), the wildly popular meta-learning algorithm for enabling deep neural networks to rapidly adapt to new tasks. Incidentally, that algorithm was a key reason why Finn landed a faculty position at Stanford. Most of the other popular posts are about (deep) reinforcement learning, which continues to be a Berkeley specialty. My first two blog posts are somewhat farther down the list, with about 10,000 page views for each. That’s still a respectable number of views.

Well, I hope that was an interesting behind-the-scenes look at the BAIR Blog. Say, I should probably contact the maintainers of the Stanford AI Blog and the CMU Machine Learning Blog to see how much we’re dominating them in terms of subscriber count and page views …