Fully Convolutional Neural Networks for Fast and Reliable Robotic Manipulation

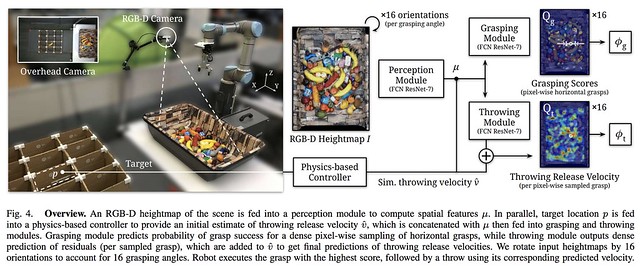

The figure above, from the TossingBot

paper with caption included, shows an example of how to use fully convolutional

neural networks for robotic manipulation.

Given the COVID-19 situation and the “shelter-in-place” order in the Bay Area, I have been working remotely the last few weeks. The silver lining is that, because I recently wrapped up a bunch of projects, I was already planning to use my Spring Break (which was last week) for brainstorming new research projects, which is more suitable for remote work, and I am fortunate that my job affords that opportunity. Part of the brainstorming process involves plowing through research papers on my never-ending “TODO” list. So while working at home during a pandemic has not been as good for me as it was for Sir Isaac Newton back then, it has not been terrible. I was able to read through three papers (and re-read one paper) about robotic manipulation using fully convolutional neural networks.

In particular, this blog post will discuss these four recent robotics papers, which I abbreviate as follows (see the bottom of the post for a full set of citations):

- Pick-and-Place (at ICRA 2018 and in IJRR 2019)

- Pushing and Grasping Synergies (at IROS 2018)

- Tossing Bot (at RSS 2019)

- Form2Fit (at ICRA 2020)

I already dissected Form2Fit in a prior blog post but I will revisit the paper as it is highly related to the first three. This blog post will compare and contrast the techniques used in these four papers.

The papers specialize in image-based robotic manipulation, where decisions are made on the basis of dense, per-pixel calculations. We call these “dense” operations because they compute something for every pixel in an input image. For an example of a concept that involves dense operations, see my recent blog post on dense object descriptors.

In order to efficiently perform dense per-pixel operations, the authors employ Fully Convolutional Neural Networks (FCNs). For a refresher on these, you can read the massively influential CVPR 2015 paper or perhaps look at resources such as Stanford’s CS 231n class. While FCNs were originally developed for semantic segmentation tasks, the papers I discuss here show how FCNs can be used for robotic manipulation.

Well, what are these papers about, and how do they use FCNs?

First, the Pick-and-Place paper focuses on picking out cluttered items from a bin. Their system employs several FCNs (as we’ll see, using several streams is common) to map from an image of a workspace (i.e., a bin of objects) to a value between 0 and 1, which is called an “affordance.” Numbers closer to 1 are better. Affordances should not be interpreted as a probability, even though I often think of them that way, because the training labels are not determined by measuring a probability of success, but by a relative scale labeled by human users. There are four action primitives: two for suctioning, and two for grasping, and the exact type used is not learned but hard-coded via surface normals (for suctioning) or location near a bin edge (for grasping). To handle grasp rotations, the authors simply discretize rotation into 16 groups by cleverly rotating the input RGBD images, and then passing all the images in parallel through the FCN. Interestingly, the Appendix reports other modeling architectures, such as $n$ separate FCNs, but that was sample inefficient and also challenging to load in GPU memory. While this isn’t the focus of my blog post, they interestingly do a pick first, then recognize framework, rather than the reverse which is probably more common. So, their robot picks the grasped object, and runs a separate neural network to recognize it. The predicted image class then tells the robot where to stow the object.

Second, the Pushing and Grasping Synergies paper investigates how to simultaneously learn pushing and grasping actions to pick items from a workspace and put them in an external bin. The reason for learning pushing (and not just grasping) is that they consider a workspace with objects situated next to each other, so that pushing first, then grasping, to isolate objects is often a better strategy than grasping alone. The system uses model-free deep Q-learning to train two FCNs, one for pushing and the other for grasping, and training is entirely self supervised: the authors cleverly set a system so that the robot can dump a box of objects on the workspace, and then tries pushing and grasping actions. Eventually, it trains the two Q-networks well enough that they can be deployed in scenarios with novel objects. The paper says just 5.5 hours of real-world data training is needed.

Third, the TossingBot paper investigates how to train a robot to throw arbitrary objects into target bins. Why do this, beyond generating cool videos? Throwing increases the range of the robot’s reachability and it may increase picking speed. The paper explores the synergy between grasps and throws, and jointly learns the two primitives so that the robot performs grasps that enable good throws. (It reminds me of the synergy between pushing and placing from the prior paper!) The throwing part uses the idea of residual physics. It learns a velocity magnitude conditioned on visual information, and then adds that to the output from an analytical physics model. That physics model helps to generalize to different target bins, and provides a reasonable initial velocity estimate. The estimate is then corrected from the learned model, because it is hard to model the forces of aerodynamic drag. The results and videos are truly impressive.

Fourth, the Form2Fit paper focuses on assembling kits together with robots. While my prior blog post covers this in detail, to summarize here again, robotic kit assembly is done with a sequence of picking and placing actions. The “picking” uses a suctioning action, and we need a good suctioning action as a pre-requisite to getting good placing actions. Both picking and placing are represented as FCNs. However, there is a third module, called a match network (also a FCN) which uses descriptors to indicate correspondence. Why? To associate a suction location on the object to a placing location, and to change the orientation. As I implied in my prior post, imagine we didn’t have the matching network. What would happen? Initially, given a grasped object, there are many ways we can place it successfully. But eventually we have to be able to assemble the entire kit, so each object must be inserted just at the right spot, and not just anywhere with high probability, so that subsequent actions can correctly fill up the kit.

So, to recap, here’s the desired output of the FCNs, assuming that they have been sufficiently trained:

-

Pick-and-Place: affordance (not probability) values for suctioning and grasping action primitives. Affordance values are bounded within $[0,1]$, and higher numbers are better.

-

Grasping and Pushing Synergies: $Q(s,a)$ values, or the discounted sum of future rewards at this given image $s$ and taking action primitive $a$, under the robot’s target (not behavioral) policy.

-

TossingBot: the output of the grasping network is the probability of “grasping success” when grasping at any particular pixel. Be aware that the training signal depends on the subsequent throwing success. The throwing network, interestingly, outputs the desired velocity residual which is added to an initial velocity estimate from an analytical physics model.

-

Form2Fit: the output of the suction and place networks are the probabilities of the respective actions. The output of the match network is the dense object descriptor representation, which is of the same height and width as the input image but with a higher dimension, as they used $d=64$ channels. This is used to indicate correspondence among the suction and place actions.

In order to make those FCNs output desired values, we need to train them. How does the process of collecting labels and training work for each method?

-

Pick-and-Place: skilled and experienced human users must manually label the affordances. Thus, this is the only paper among the four here that does not employ automatic data labeling via trial and error. The human manually labels pixels as positive, negative, or neither, and then pixels with neither are trained with a loss value of 0 via backpropagation. The authors had to design an interface to make this feasible, and it has to be sparsely labeled to make this practical. The training data consists of fewer than 2000 of these manually labeled images, though this is surely before data augmentation. Interestingly, 2000 is roughly on the order of how many images I had for our bed-making paper.

-

Grasping and Pushing Synergies: the labels are implicit through reinforcement learning rewards. Their reward design is simple: a $+1$ for a successful grasp and a $+0.5$ for a successful push that “meaningfully changes the scene” — the latter requires a hard-coded threshold. Through model-free reinforcement learning and backpropagation, the FCNs updates the parameters such that their output computes the learned value function.

-

TossingBot: the robot collects data through trial-and-error, and the videos show how the system is set to be self-supervised to keep human intervention at a minimum. The grasping network is trained with throwing success, not grasping success. This is critical because the whole point of grasping is to enable good throws! Therefore, when I say “grasping success probability” it really should be interpreted as “probability that this grasp will be successful for a subsequent throw.” They automatically get this label by checking if the grasped image landed in the target box. For the throw, we first get the analytical estimate \(\|\hat{v}_{x,y}\|\) from physics equations conditioned on a known target spot. Then, we get the actual landing spot from overhead cameras, which I assume are similar to the ones for detecting throwing success, and can deduce the true residual from that.

- Form2Fit: the data collection here is a bit subtle, and covered in depth in my prior blog post. It’s clever and involves reversing the task, i.e., disassembly. It is easier to disassemble than assemble, and by doing this, the robot gets data points for training the picking and placing modules, and then training the dense object net to get the match module. Once again for a grasp point or suction point, we take a single pixel (actually, a radius around it) and then backpropagate through it.

Now that we have the FCNs, what actions should the robot take at each time step? This is generally straightforward once the FCNs have done their heavy duty task in getting per-pixel image numbers:

-

Pick-and-Place: given all the possible action primitives along with all the rotations, pick the single pixel with the highest affordance value, and execute that action. This involves a maximum operation over every single image output from the FCN (including a factor of 16x for rotations), and then a second maximum over pixels in them. That’s the idea, but in practice they employed some heuristics. One is “suction first then grasp,” which led them to artificially scale the suctioning affordance values. Another one is that if the robot repeatedly tries an action but does not affect the scene — a problem I’ve experienced in several research projects — then they decrease the affordances of the relevant pixels. It’s these little things that, though somewhat hacky, help maximize performance.

-

Grasping and Pushing Synergies: the action chosen is one that maximizes the Q-values. In other words, take the maximum over all the 32 possible images (16 for grasping, 16 for pushing) over all pixels within those images. That’s a lot to consider, but the computation is parallelized.

-

TossingBot: the pixel with the highest grasp probability (from the output of the grasping module) across all orientations is chosen for the grasp point. Then, the robot will toss using the corresponding predicted velocity, which is provided in the same pixel location and same orientation in the output image of the throwing module.

-

Form2Fit: the planner first samples a set of potential actions. It then uses the descriptors to see which pick-and-place pair has the lowest L2 distance in descriptor space, and chooses that action. This “minimize distance in descriptor space” is standard for many of the robotics and descriptors papers I read nowadays. It can be expensive to sample and evaluate so many actions, so it is necessary to tune the sampling frequency.

Overall, what do these papers suggest as the advantages of the FCN-based approach?

-

The technique is object-agnostic in that it does not make any assumptions about the kind of objects the robot might grasp.

-

FCNs are efficient for per-pixel calculations, and this is helpful when we want a label for every pixel in an input image. In addition, the resulting action is often a simple function of the FCN output, such as taking an “argmax” across the pixels, as mentioned earlier. Some other alternatives for data-driven robotic grasping, as covered in an earlier blog post, require sampling a set of image patches or running the Cross Entropy Method.

-

Their specific architectural choice of rotating the input image by 16, to represent 16 different rotations, means they do not need to consider rotation as part of the action, simplifying the primitive. In addition, by keeping the different rotations in one architecture, rather than splitting into 16 different networks or 16 different trunks, they can use weight sharing to improve generalization and training efficiency.

-

Since the output is of the same dimension of the input with per-pixel properties, one can debug and/or interpret the output by looking at a heat map to see which values are higher.

There is other work that uses FCNs for efficient grasping, such as one that came right out of our own AUTOLAB and was presented at ICRA 2019. That paper, interestingly, trained a Convolutional Neural Network and then converted it to a Fully Convolutional Neural Network, to avoid the manual labeling done in the Robotic Pick-and-Place paper.

If you are interested in learning how to accelerate training of affordance-based policies with FCNs, I refer you to an ICRA 2020 paper which argues for the benefits of visual pre-training based on passive data without robotic interaction. This means the subsequent fine-tuning on active data from interaction is significantly shorter.

Overall it seems like FCNs are a powerful ingredient in the machine learning and robotics toolbox, and can be combined with techniques such as reinforcement learning, dense object descriptors, self-supervision, and other techniques.

Here are the full citations of the papers I discussed:

-

Andy Zeng, Shuran Song, Kuan-Ting Yu, Elliott Donlon, Francois R. Hogan, Maria Bauza, Daolin Ma, Orion Taylor, Melody Liu, Eudald Romo, Nima Fazeli, Ferran Alet, Nikhil Chavan Dafle, Rachel Holladay, Isabella Morona, Prem Qu Nair, Druck Green, Ian Taylor, Weber Liu, Thomas Funkhouser, Alberto Rodriguez. Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching. ICRA 2018, IJRR 2019.

-

Andy Zeng, Shuran Song, Stefan Welker, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser. Learning Synergies between Pushing and Grasping with Self-supervised Deep Reinforcement Learning. IROS 2018.

-

Andy Zeng, Shuran Song, Johnny Lee, Alberto Rodriguez, Thomas Funkhouser. TossingBot: Learning to Throw Arbitrary Objects with Residual Physics. RSS 2019.

-

Kevin Zakka, Andy Zeng, Johnny Lee, Shuran Song. Form2Fit: Learning Shape Priors for Generalizable Assembly from Disassembly. ICRA 2020.

Thank you for reading, and stay safe.